The short answer is yes. The longer answer is that the devil is in the details, so keep reading.

Why compare ZeroGPT with other AI detectors?

ZeroGPT is one of many AI writing detectors out there, but being well known doesn’t make it better than the rest. That’s why we tested ZeroGPT alongside three other popular detectors: GPTZero, Turnitin’s AI detection, and Winston AI. We collected 160 short texts (82 AI-written and 78 human-written) and recorded how each tool labeled them: whether it flagged each text as “AI” or “Human,” along with its score from 0–100. The results might surprise you.

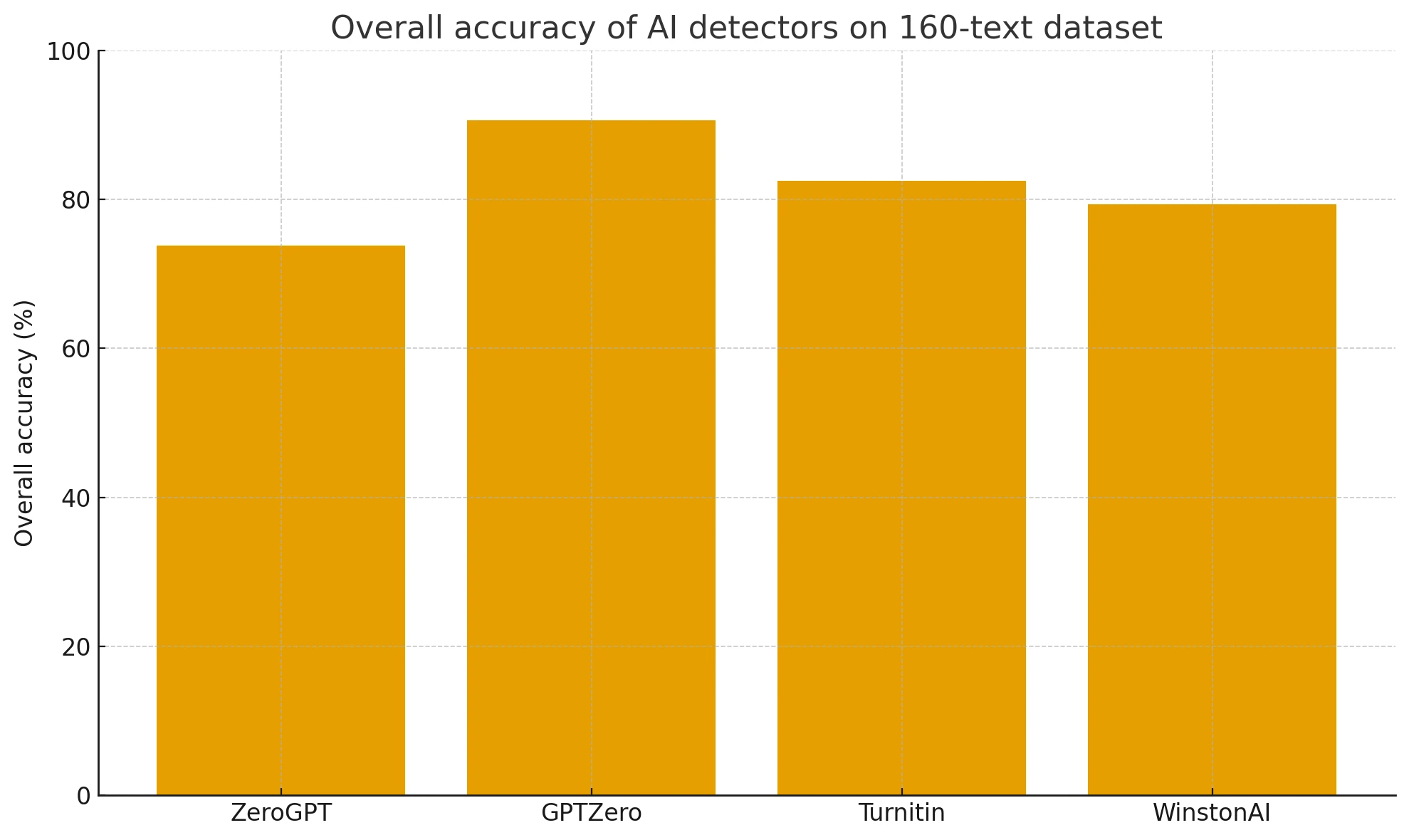

Overall Accuracy Results

We ran the same 160 short texts (78 human-written, 82 AI-written) through all four detectors. Here’s a summary of what we found:

| Tool | Overall accuracy | AI-text accuracy | Human-text accuracy | False positives | False negatives |
|---|---|---|---|---|---|
| ZeroGPT | 73.8% | 68.3% | 79.5% | 20.5% | 31.7% |
| GPTZero | 90.6% | 82.9% | 98.7% | 1.3% | 17.1% |
| Turnitin | 82.5% | 67.1% | 98.7% | 1.3% | 32.9% |
| Winston AI | 79.4% | 65.9% | 93.6% | 6.4% | 34.1% |

These numbers might look intimidating at first, but they’re quite simple once you break them down:

- Overall accuracy is the percentage of all 160 texts a tool labeled correctly.

- AI-text accuracy is the percentage of AI-generated texts a tool correctly flagged as AI.

- Human-text accuracy is the percentage of human-written texts a tool correctly labeled as human.

- False positives is the percentage of human texts a detector wrongly labeled as AI (100% minus human-text accuracy).

- False negatives is the percentage of AI texts a detector wrongly labeled as human (100% minus AI-text accuracy).
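To make the definitions concrete, here is a minimal Python sketch of how the five columns relate to raw counts. The function name `detector_metrics` is ours, and the counts in the usage example (56 of 82 AI texts and 62 of 78 human texts labeled correctly) are back-calculated from ZeroGPT’s reported percentages. They are an assumption, not raw data from the test.

```python
def detector_metrics(ai_correct, ai_total, human_correct, human_total):
    """Return the five table columns as percentages (hypothetical helper)."""
    total = ai_total + human_total
    return {
        "overall": 100 * (ai_correct + human_correct) / total,
        "ai_text": 100 * ai_correct / ai_total,
        "human_text": 100 * human_correct / human_total,
        # Human texts wrongly labeled as AI
        "false_positives": 100 * (human_total - human_correct) / human_total,
        # AI texts wrongly labeled as human
        "false_negatives": 100 * (ai_total - ai_correct) / ai_total,
    }


if __name__ == "__main__":
    # Counts inferred from the reported percentages (assumption, not raw data)
    zerogpt = detector_metrics(ai_correct=56, ai_total=82,
                               human_correct=62, human_total=78)
    for name, value in zerogpt.items():
        print(f"{name}: {value:.1f}%")
```

With those inferred counts, the output should land very close to the ZeroGPT row in the table. Note that overall accuracy is a weighted mix of the two per-class accuracies, so it depends on how many AI and human texts are in the sample.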

Big-picture takeaways

From the table, it’s clear that GPTZero outperformed every other tool overall with 90.6% accuracy. Turnitin came second at 82.5%, Winston AI third at 79.4%, and ZeroGPT last at 73.8%.

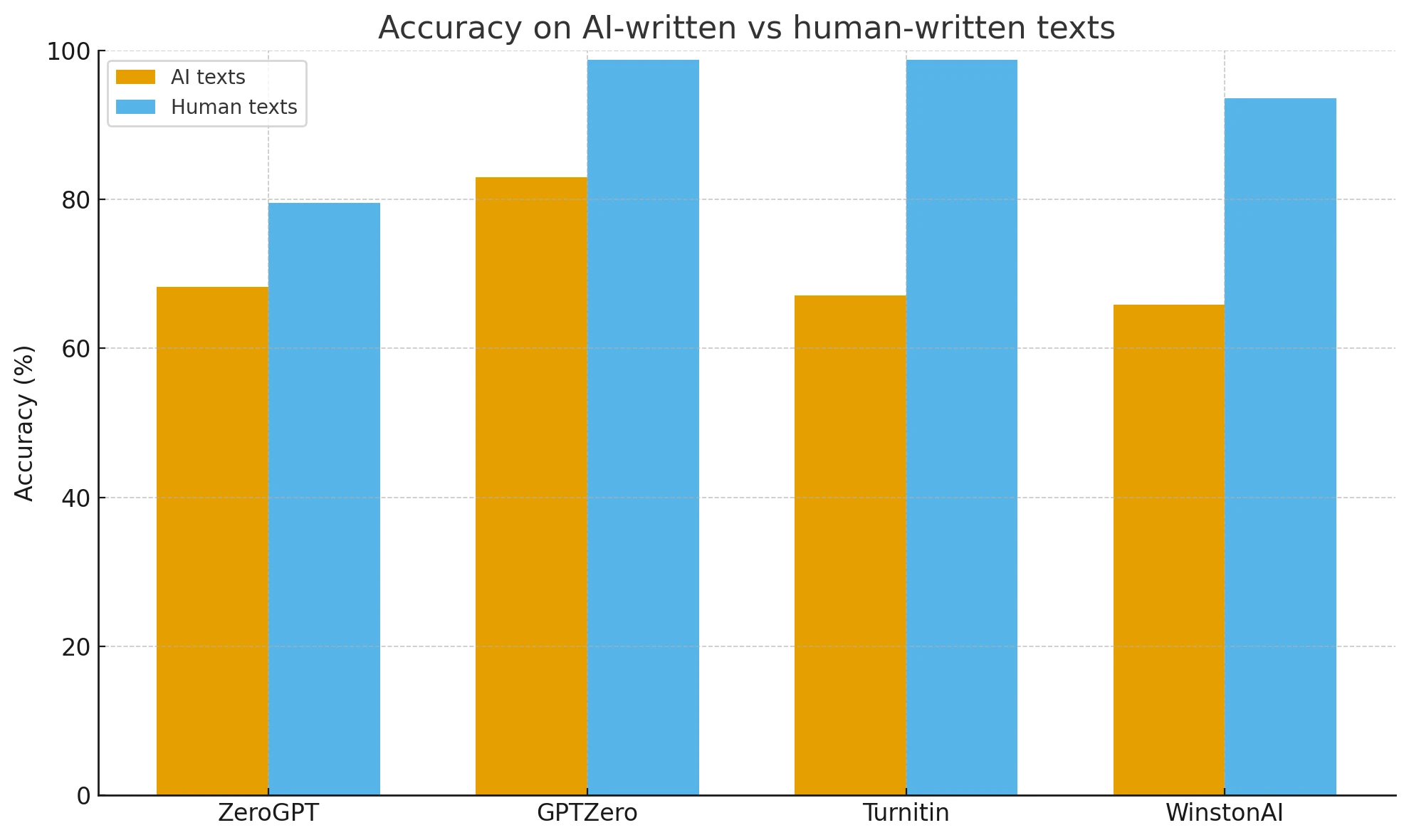

One big takeaway is that all four detectors do a better job detecting genuinely human-written text than AI text. For instance:

- GPTZero was correct on 99% of human texts.

- Turnitin was also 99% on that front.

- Winston AI was 94%.

- ZeroGPT’s human-text accuracy was 79%.

That also gives ZeroGPT the highest false-positive rate (20.5%), meaning it incorrectly labeled more than 1 in 5 human texts as AI.


All these tools also missed a chunk of AI texts:

- GPTZero mislabeled about 17% of AI texts as “Human.”

- Winston AI mislabeled around 34% of AI texts.

- Turnitin mislabeled 33% of AI texts.

- ZeroGPT mislabeled nearly 32%.

Score distributions (and what they mean in simpler terms)

- ZeroGPT’s “AI-ness” score (0–100):

  - Average for AI text: ~72 (many AI texts hit 100).

  - Average for human text: ~30 (but some soared up to 100).

- GPTZero’s “human-ness” score (0–100):

  - AI texts: average ~20 (many scoring 0).

  - Human texts: average ~98 (most near 100).

- Turnitin’s “AI score” (0–100):

  - AI texts: average ~68 (wide spread).

  - Human texts: mostly 0 (with a few outliers).

- Winston AI (higher means more human):

  - AI texts: average ~34.

  - Human texts: average ~90 (often above 95).

In simpler terms, a large gap between AI and human scores makes detection easier. GPTZero had such a gap, which explains its high accuracy. ZeroGPT’s overlap led to more false positives.
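Here is a rough Python sketch of why overlap matters. It assumes an “AI-ness” score where higher means more AI-like and a cutoff of 50; the cutoff, the function name `error_rates`, and every score in the usage example are made up for illustration and are not taken from our test data or from any tool’s documentation.

```python
def error_rates(ai_scores, human_scores, threshold=50):
    """Error rates (in %) when any score >= threshold is labeled "AI".

    Scores are assumed to be "AI-ness" values from 0 to 100.
    """
    false_negatives = sum(1 for s in ai_scores if s < threshold)
    false_positives = sum(1 for s in human_scores if s >= threshold)
    return {
        "false_positive_rate": 100 * false_positives / len(human_scores),
        "false_negative_rate": 100 * false_negatives / len(ai_scores),
    }


if __name__ == "__main__":
    # Hypothetical scores: the two groups sit far apart
    print(error_rates(ai_scores=[95, 90, 100, 85], human_scores=[0, 5, 10, 2]))
    # Hypothetical scores: one human text scores 100 and one AI text scores 40
    print(error_rates(ai_scores=[100, 72, 40, 80], human_scores=[10, 30, 100, 20]))
```

When the two groups of scores are far apart, almost any cutoff separates them cleanly. Once they overlap, no cutoff can avoid both kinds of error: lowering it catches more AI text but flags more humans, and raising it does the opposite.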


How does ZeroGPT work (in simple language)?

- Perplexity: Measures how predictable the next word is. AI text tends to be more predictable (low perplexity).

- Burstiness: Measures variation in sentence length and style. Humans are usually more irregular than AI.

Based on these measures (and other undisclosed factors), ZeroGPT outputs a label (“AI-generated” or “Human written”) plus a confidence score from 0–100.
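For the curious, the two ideas can be sketched in a few lines of Python. This is not ZeroGPT’s actual code, which isn’t public. Perplexity here uses the standard definition (the exponential of the average negative log-probability per token) and takes a list of per-token probabilities that you would need to get from a language model. Burstiness is approximated as the variation in sentence length relative to the average, which is just one simple way to measure it. Both function names are ours.

```python
import math
import re
import statistics


def perplexity(token_probs):
    """Standard perplexity: exp of the mean negative log-probability per token."""
    if not token_probs:
        raise ValueError("need at least one token probability")
    avg_neg_log = -sum(math.log(p) for p in token_probs) / len(token_probs)
    return math.exp(avg_neg_log)


def burstiness(text):
    """Std. deviation of sentence lengths (in words) divided by their mean.

    0.0 means every sentence has the same length. This is a simple proxy,
    not any detector's real formula.
    """
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    lengths = [len(s.split()) for s in sentences]
    if len(lengths) < 2:
        return 0.0
    return statistics.pstdev(lengths) / statistics.mean(lengths)


if __name__ == "__main__":
    # A model that gives every token a probability of 0.5 has perplexity of about 2
    print(perplexity([0.5, 0.5, 0.5]))
    print(burstiness("Short one. This sentence is quite a bit longer than the first. Ok."))
```

Lower perplexity and lower burstiness both push a text toward the “AI” side under this intuition, but real detectors combine many more signals than these two.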


Caveats & Practical Implications

- We only used 160 short texts, which is a small sample size.

- Different text lengths or writing styles might yield different results.

- Tools struggle with heavily edited AI content, very short text, and non-native English.

False positives can unfairly damage someone’s credibility. These detectors should act as a “smoke alarm,” not a verdict. If you see a flag, dig deeper: talk to the writer, review drafts, or compare writing style.

A single opinion that might help

If you’re worried about false flags, GPTZero (tied with Turnitin at 1.3% false positives) performed best at not mislabeling human texts in this dataset. ZeroGPT was the weakest on human texts and overall, although on AI texts it actually ranked second behind GPTZero. But remember, no detector is perfect, especially with short or edited content.

The Bottom Line

ZeroGPT, despite its claims, performed the weakest on this 160-text test. GPTZero topped the list, Turnitin did well, and Winston AI was in the middle. All detectors have flaws, so always include manual checks or conversations with the writer. They are detective tools, not the ultimate judge.

![[HOT] Can You Compare ZeroGPT With Other Top Tools?](/static/images/can-you-compare-zerogpt-with-other-top-toolspng.webp)