Last updated: December 27, 2025

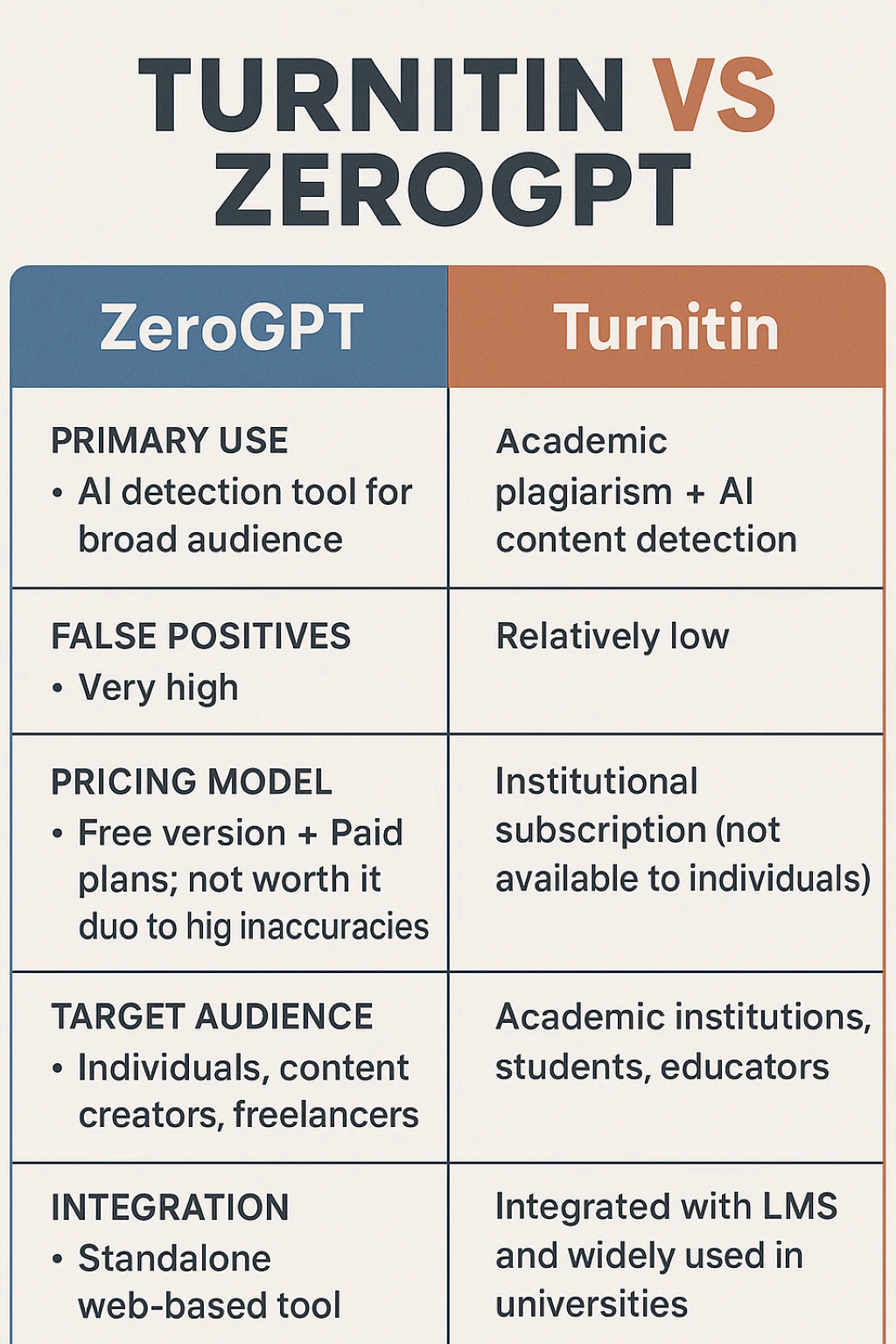

Turnitin has been the gold standard for plagiarism detection in academic institutions for years, and its AI-detection layer is now part of that broader ecosystem. ZeroGPT, on the other hand, is a much more accessible (and heavily marketed) AI detector aimed at individuals.

The short answer to “which is better?” is still Turnitin, and now I’m backing that up with hard numbers from a controlled accuracy test (details below). The longer answer is nuanced, because “better” depends on whether you care more about false positives (flagging human text as AI) or false negatives (missing AI text).

Why Compare Turnitin with ZeroGPT?

The simple reason: ZeroGPT positions itself as an AI detection tool for a broad audience, while Turnitin is built primarily for educational institutions. But the real question is which one does a better job at identifying AI-generated text without punishing genuine human writing.

My long-standing concern with ZeroGPT is that it produces too many false positives. You can write a clean, grammatical paragraph and still get flagged as “AI.” For bloggers, students, and professionals, that’s not a minor issue: it directly affects trust.

Quick Summary (TL;DR)

- Turnitin: Very low false positive rate on this test (1.28%), strong overall accuracy (82.50%).

- ZeroGPT: Much higher false positive rate on this test (20.51%), lower overall accuracy (73.75%).

- Both tools: Similar AI recall (ability to catch AI) in this dataset (~67–68%).

Real Test Results (Accuracy, False Positives, and More)

To move beyond opinions, I ran a side-by-side accuracy test on the same dataset:

- Total samples: 160

- True AI samples: 82

- True Human samples: 78

- Positive class: “AI” (meaning we treat “AI” as the “positive” label when computing precision/recall/FPR/FNR)

What matters most in the real world:

- False Positive Rate (FPR): How often human writing gets wrongly flagged as AI.

- False Negative Rate (FNR): How often AI writing slips through as “human.”

- Accuracy: Overall correctness across both classes.

- Precision (AI): When the tool says “AI,” how often it’s actually AI.

- Recall (AI): How much AI the tool successfully catches.

- AUC: How well the numeric score separates AI vs Human overall (higher is better).
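The count-based definitions above can be sketched as a small helper. This is a minimal illustration, not the tooling used for the test: the `Confusion` class and `metrics` function are hypothetical names, and the counts plugged in are the ones from the confusion matrices reported in this article.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Confusion:
    """Confusion counts with "AI" as the positive class (hypothetical helper)."""
    tp: int  # AI samples flagged as AI
    fp: int  # human samples flagged as AI
    tn: int  # human samples passed as human
    fn: int  # AI samples passed as human


def metrics(c: Confusion) -> dict[str, float]:
    """Compute the count-based metrics defined in the list above."""
    total = c.tp + c.fp + c.tn + c.fn
    precision = c.tp / (c.tp + c.fp)
    recall = c.tp / (c.tp + c.fn)
    return {
        "accuracy": (c.tp + c.tn) / total,
        "fpr": c.fp / (c.fp + c.tn),          # humans wrongly flagged
        "fnr": c.fn / (c.fn + c.tp),          # AI that slipped through
        "precision": precision,
        "recall": recall,
        "specificity": c.tn / (c.tn + c.fp),
        "f1": 2 * precision * recall / (precision + recall),
    }


# Counts taken from the confusion matrices in this article.
turnitin = metrics(Confusion(tp=55, fp=1, tn=77, fn=27))
zerogpt = metrics(Confusion(tp=56, fp=16, tn=62, fn=26))

for name, m in (("Turnitin", turnitin), ("ZeroGPT", zerogpt)):
    print(name, {k: f"{v:.2%}" for k, v in m.items()})
```

AUC is not included here because it depends on the per-sample scores, not on the four counts.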

Metrics Table

| Metric (AI is “Positive”) | Turnitin | ZeroGPT |
|---|---|---|
| Accuracy | 82.50% (132/160 correct) | 73.75% (118/160 correct) |
| False Positive Rate (Human → AI) | 1.28% (1/78 humans falsely flagged) | 20.51% (16/78 humans falsely flagged) |
| False Negative Rate (AI → Human) | 32.93% (27/82 AI missed) | 31.71% (26/82 AI missed) |
| Precision (AI) | 98.21% (55 true AI / 56 flagged as AI) | 77.78% (56 true AI / 72 flagged as AI) |
| Recall / True Positive Rate (AI) | 67.07% (55/82) | 68.29% (56/82) |
| Specificity / True Negative Rate (Human) | 98.72% (77/78) | 79.49% (62/78) |
| F1 (AI) | 79.71% | 72.73% |
| AUC (Score Separation) | 0.874 | 0.805 |
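Unlike the other rows, AUC is computed from each sample's numeric score, not from a yes/no label. One common way to read it: the probability that a randomly chosen AI sample receives a higher score than a randomly chosen human sample. The sketch below shows that pairwise definition. The scores are made-up toy values for illustration, not data from this test, and `auc` is a hypothetical helper name.

```python
def auc(scores_ai: list[float], scores_human: list[float]) -> float:
    """Pairwise AUC: share of (AI, human) pairs where the AI sample scores higher.

    Ties count as half a win. This is O(n*m), fine for small datasets.
    """
    wins = 0.0
    for a in scores_ai:
        for h in scores_human:
            if a > h:
                wins += 1.0
            elif a == h:
                wins += 0.5
    return wins / (len(scores_ai) * len(scores_human))


# Hypothetical toy scores (0 = "looks human", 1 = "looks AI").
ai_scores = [0.95, 0.80, 0.60, 0.30]
human_scores = [0.10, 0.20, 0.40, 0.70]

toy_auc = auc(ai_scores, human_scores)
print(f"toy AUC = {toy_auc:.4f}")
```

An AUC of 0.5 means the score separates the classes no better than chance, and 1.0 means every AI sample outscores every human sample.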

Confusion Matrices (Counts)

Turnitin

| | Predicted Human | Predicted AI |
|---|---|---|
| True Human | 77 (TN) | 1 (FP) |
| True AI | 27 (FN) | 55 (TP) |

ZeroGPT

| | Predicted Human | Predicted AI |
|---|---|---|
| True Human | 62 (TN) | 16 (FP) |
| True AI | 26 (FN) | 56 (TP) |

Visual Comparison (Bar Chart)

The chart below highlights the biggest practical difference: false positives. Turnitin almost never flags human writing as AI in this test (1 of 78 samples), while ZeroGPT flags about one in five (16 of 78).

Turnitin’s Strengths in AI Detection (Backed by Data)

Turnitin isn’t perfect, but it’s clearly much safer on human writing in this test. The standout metric is the false positive rate:

- Turnitin FPR: 1.28% (1 out of 78 human samples flagged as AI)

- ZeroGPT FPR: 20.51% (16 out of 78 human samples flagged as AI)

That difference matters because false positives are the most damaging failure mode in education and professional settings. If you are a student, a researcher, or a legitimate writer, being incorrectly flagged can create avoidable friction even when your work is genuinely original.

Also, Turnitin’s precision is very high here: 98.21%. In plain English: when Turnitin says “AI,” it’s almost always correct in this dataset.

ZeroGPT’s Biggest Weakness: False Positives (Now Quantified)

ZeroGPT is accessible and fast, but the data matches what many users complain about: it flags human writing as “AI” far too often.

In this test, ZeroGPT’s false positive rate was 20.51%. That means roughly 1 in 5 human samples were incorrectly labeled as AI.

Even more importantly, this wasn’t just borderline scoring. In the dataset, several human samples received extremely high “AI” scores—exactly the kind of result that can scare users into thinking they did something wrong when they didn’t.

How Do They Work?

ZeroGPT

ZeroGPT relies on a blend of heuristic signals and NLP-style pattern analysis to estimate whether a piece of text has “AI-like” characteristics.

Typically, tools like this analyze factors such as sentence structure, stylistic consistency, and “predictability” (often discussed publicly as perplexity and burstiness). If the writing appears overly uniform or consistently polished, the detector may assign a higher likelihood of AI authorship.

The issue is that highly structured, well-edited human writing can look “machine-like” to an overly sensitive detector—which is one plausible reason ZeroGPT produces more false positives in practice.
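To make the “burstiness” idea concrete, here is a toy sketch that measures how much sentence length varies within a text. It is not ZeroGPT’s actual method, which is not public. The `burstiness` function, its definition (standard deviation of sentence length divided by the mean), and the sample texts are all assumptions chosen for illustration. Perplexity is left out because it requires a language model.

```python
import re
import statistics


def sentence_lengths(text: str) -> list[int]:
    """Split on ., ! or ? and return the word count of each sentence."""
    sentences = [s for s in re.split(r"[.!?]+\s*", text) if s.strip()]
    return [len(s.split()) for s in sentences]


def burstiness(text: str) -> float:
    """Toy burstiness: variation in sentence length relative to the mean.

    0.0 means every sentence has the same length (very uniform writing).
    """
    lengths = sentence_lengths(text)
    if len(lengths) < 2:
        return 0.0
    return statistics.pstdev(lengths) / statistics.mean(lengths)


# Hypothetical sample texts.
uniform = "The cat sat down. The dog ran off. The bird flew away."
varied = (
    "Stop. The storm rolled in over the hills long before anyone "
    "had noticed the sky. We ran."
)

print("uniform:", burstiness(uniform))
print("varied: ", burstiness(varied))
```

A detector that leans heavily on a signal like this would score the uniform text as more “machine-like,” even though a careful human editor could easily have written it. That is the failure mode described above.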

Turnitin

Turnitin’s AI detection operates within the same workflow as its plagiarism infrastructure.

Once a document is uploaded, Turnitin runs a proprietary analysis that looks for patterns associated with AI-generated text. While the underlying method is not fully public, the system appears designed to be conservative in accusing human writing—consistent with the extremely low false positive rate observed in this test.

A Single Opinion That Matters (With a Reality Check)

If you’re a serious writer or a student who wants fewer false alarms, Turnitin is the more reliable option in this dataset. The most important practical difference is simple:

- Turnitin is far less likely to falsely accuse a human writer.

- ZeroGPT is far more likely to produce false positives.

However, note the tradeoff: both tools missed roughly a third of the AI samples (false negative rates of 32.93% for Turnitin and 31.71% for ZeroGPT). That’s why AI detectors should be treated as screening signals, not final verdicts.

Limitations (Transparency Section)

- This test is based on 160 samples (82 AI, 78 human). Results can vary with different topics, writing styles, and newer model outputs.

- AI detectors may change over time as vendors update models and thresholds.

- No detector should be used as the sole basis for disciplinary action. Human review and contextual evidence matter.
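To put the sample-size limitation in numbers, a confidence interval can be placed around each false positive rate. The sketch below uses the Wilson score interval at roughly 95% confidence (z = 1.96). That choice of interval is my assumption for illustration, not part of the original test. The counts are the 1/78 and 16/78 human samples flagged in this article.

```python
import math


def wilson_interval(successes: int, n: int, z: float = 1.96) -> tuple[float, float]:
    """Wilson score interval for a binomial proportion (z=1.96 is about 95%)."""
    p = successes / n
    denom = 1 + z**2 / n
    center = (p + z**2 / (2 * n)) / denom
    half = z * math.sqrt(p * (1 - p) / n + z**2 / (4 * n**2)) / denom
    return center - half, center + half


# False positives out of 78 human samples, from this article's test.
turnitin_lo, turnitin_hi = wilson_interval(1, 78)
zerogpt_lo, zerogpt_hi = wilson_interval(16, 78)

print(f"Turnitin FPR interval: {turnitin_lo:.1%} to {turnitin_hi:.1%}")
print(f"ZeroGPT FPR interval:  {zerogpt_lo:.1%} to {zerogpt_hi:.1%}")
```

With only 78 human samples the intervals are wide, so the exact percentages should be read as estimates. Under these assumptions the two intervals do not overlap, which is consistent with the gap in false positives being more than sampling noise.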

Frequently Asked Questions

Q1. Can I use Turnitin if my school doesn’t subscribe?

Not directly. Turnitin is typically licensed at the institutional level. If your university doesn’t provide access, you generally can’t subscribe individually through official channels.

Q2. Is ZeroGPT’s free version good enough?

It’s usable for casual checks, but the main issue is reliability. In this dataset, ZeroGPT falsely flagged 20.51% of human samples as AI. If accuracy matters (academics, client work, formal writing), that’s a major drawback.

Q3. Who should use Turnitin?

Turnitin is best suited for academic institutions and educators who need plagiarism detection plus AI screening, especially when false positives are costly.

Q4. Is Turnitin always right?

No. In this test, Turnitin still missed 32.93% of AI samples (false negatives). The key difference is that it almost never falsely accused human writing here (only 1.28% false positives).

Q5. Should I invest in ZeroGPT’s paid plan?

If your main reason for paying is “fewer false positives,” be cautious. The core issue is detection behavior itself: on this dataset, ZeroGPT produced many human→AI mistakes. Paying doesn’t necessarily change that underlying sensitivity unless the vendor explicitly provides a different model/threshold for paid tiers.

The Bottom Line

Both Turnitin and ZeroGPT are AI content detection tools, but in this controlled test, Turnitin was clearly more reliable overall—and dramatically better at avoiding false positives:

- Turnitin accuracy: 82.50%

- ZeroGPT accuracy: 73.75%

- Turnitin false positives: 1.28%

- ZeroGPT false positives: 20.51%

If you need consistency and a low chance of wrongly flagging human writing, Turnitin is the safer choice in this dataset. If your main goal is a quick, free check and you can tolerate a higher risk of false positives, you can try ZeroGPT, but you should not treat its output as definitive.

![[STUDY] Turnitin vs. ZeroGPT: How do they compare?](/static/images/turnitin-vs-zerogpt-featuredpng.webp)