Turnitin’s AI detection is used by many students and teachers around the world. But can Turnitin detect GPT-5? The short answer is yes. The longer answer is that the devil is in the details. Keep reading to find out more.

Why does Turnitin detect GPT-5?

We tested a dataset of 180 passages produced by four GPT-5 models: gpt-5, gpt-5-mini, gpt-5-nano, and gpt-5-chat-latest.

Since OpenAI keeps changing the model labels in the ChatGPT website/app, we used the OpenAI API instead, which has clean and consistent model names. Also, when you use the ChatGPT app/website, your requests are routed to one of these models, no matter what the labels say.

One important thing to note is that gpt-5, gpt-5-mini, and gpt-5-nano are 'thinking' models with a 'reasoning effort' setting that can be 'minimal', 'low', 'medium', or 'high'. We used the default setting of 'medium' for this test. gpt-5-chat-latest is the only non-reasoning model here; for it, we used the default sampling settings (temperature 1, top_p 1).
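The settings above can be sketched as a small request builder. This is only an illustration, not the script we ran: `build_request` and `REASONING_MODELS` are hypothetical names, and the parameter names (`reasoning_effort`, `temperature`, `top_p`) are assumed to match the OpenAI chat-completions API.

```python
# Hypothetical helper, not the actual test script. Parameter names are
# assumed to match the OpenAI chat-completions API.
REASONING_MODELS = {"gpt-5", "gpt-5-mini", "gpt-5-nano"}


def build_request(model: str, prompt: str) -> dict:
    """Return keyword arguments for one generation request."""
    request = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    if model in REASONING_MODELS:
        # Reasoning models: keep the default 'medium' effort.
        request["reasoning_effort"] = "medium"
    else:
        # gpt-5-chat-latest: default sampling settings.
        request["temperature"] = 1
        request["top_p"] = 1
    return request
```

With the official Python client, the resulting dictionary could presumably be passed along as `client.chat.completions.create(**build_request("gpt-5", prompt))`.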

Just like ZeroGPT, Turnitin assigns an “AI score” from 0–100 to each passage, where a higher score means it is more confident that the text is AI-written. Now, before you jump to any conclusion, let me warn you: Turnitin’s detection is not that reliable.

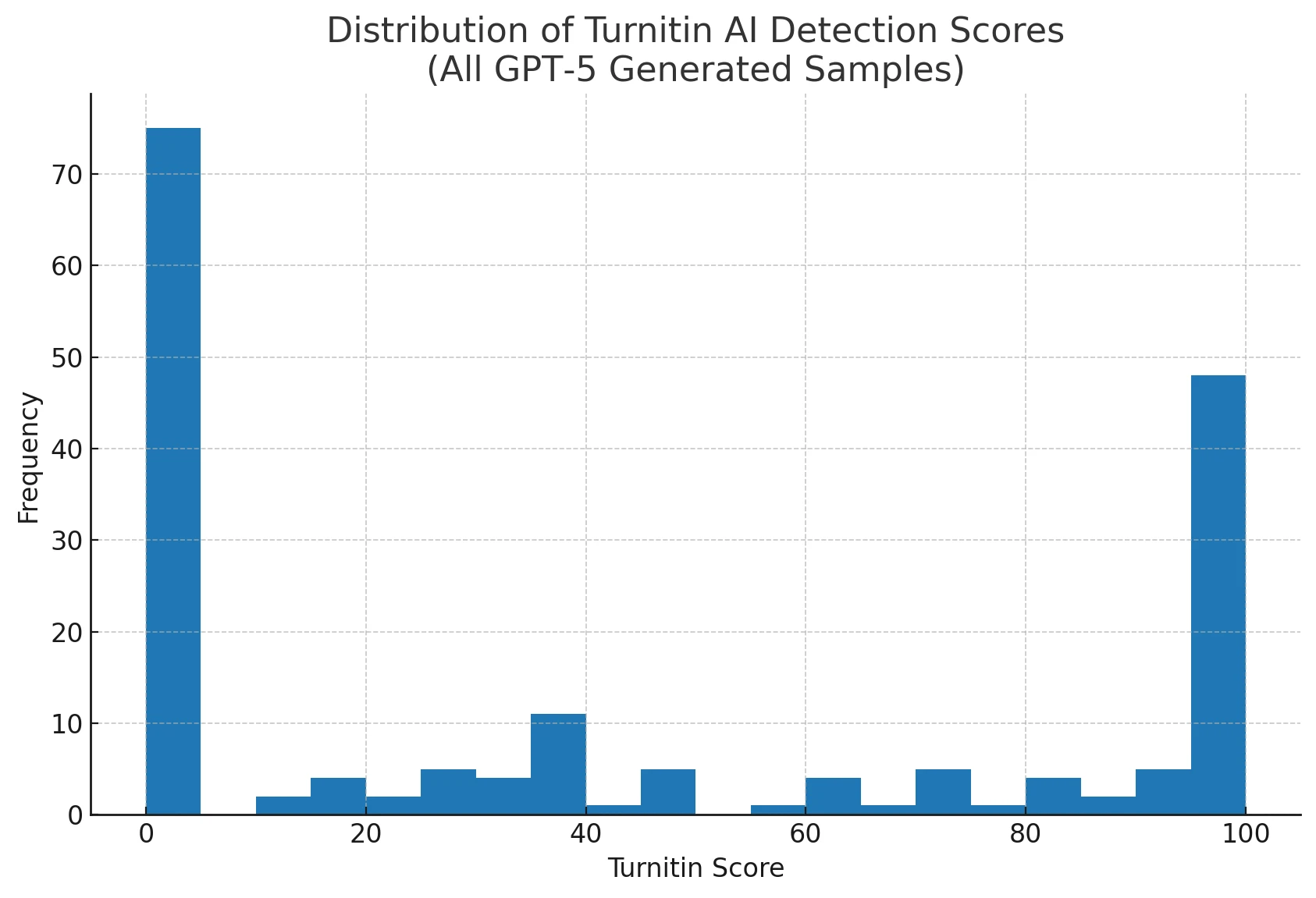

It turns out that only 39% of GPT-5 texts crossed Turnitin’s 50 score mark, which is the line Turnitin itself treats as “likely AI.” This means 61% of pure AI writing (from GPT-5) slipped under the radar! This is a big deal: if you rely heavily on Turnitin’s detection, you will fail to flag a lot of text that actually came from GPT-5.


Which GPT-5 variant gets detected more by Turnitin?

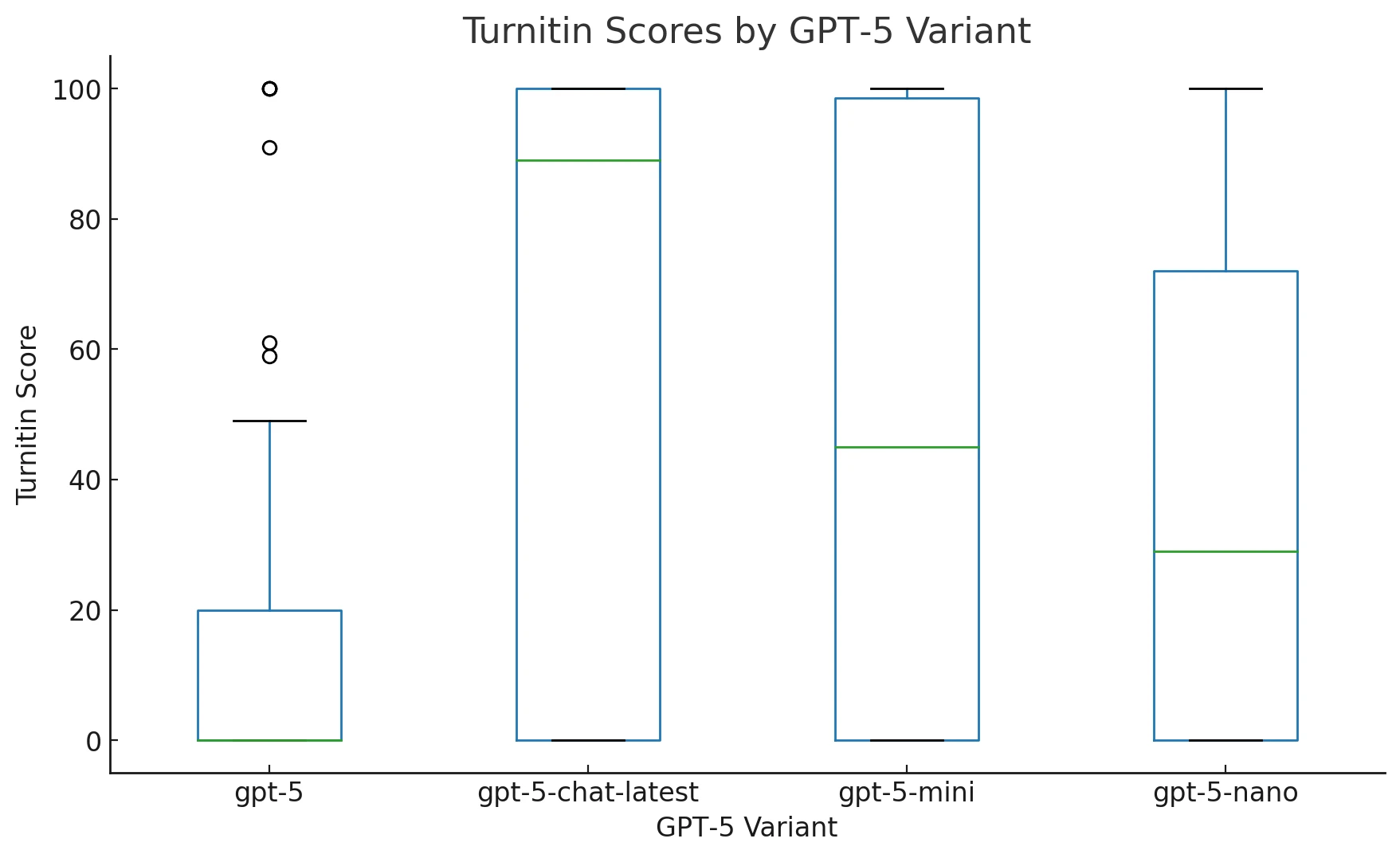

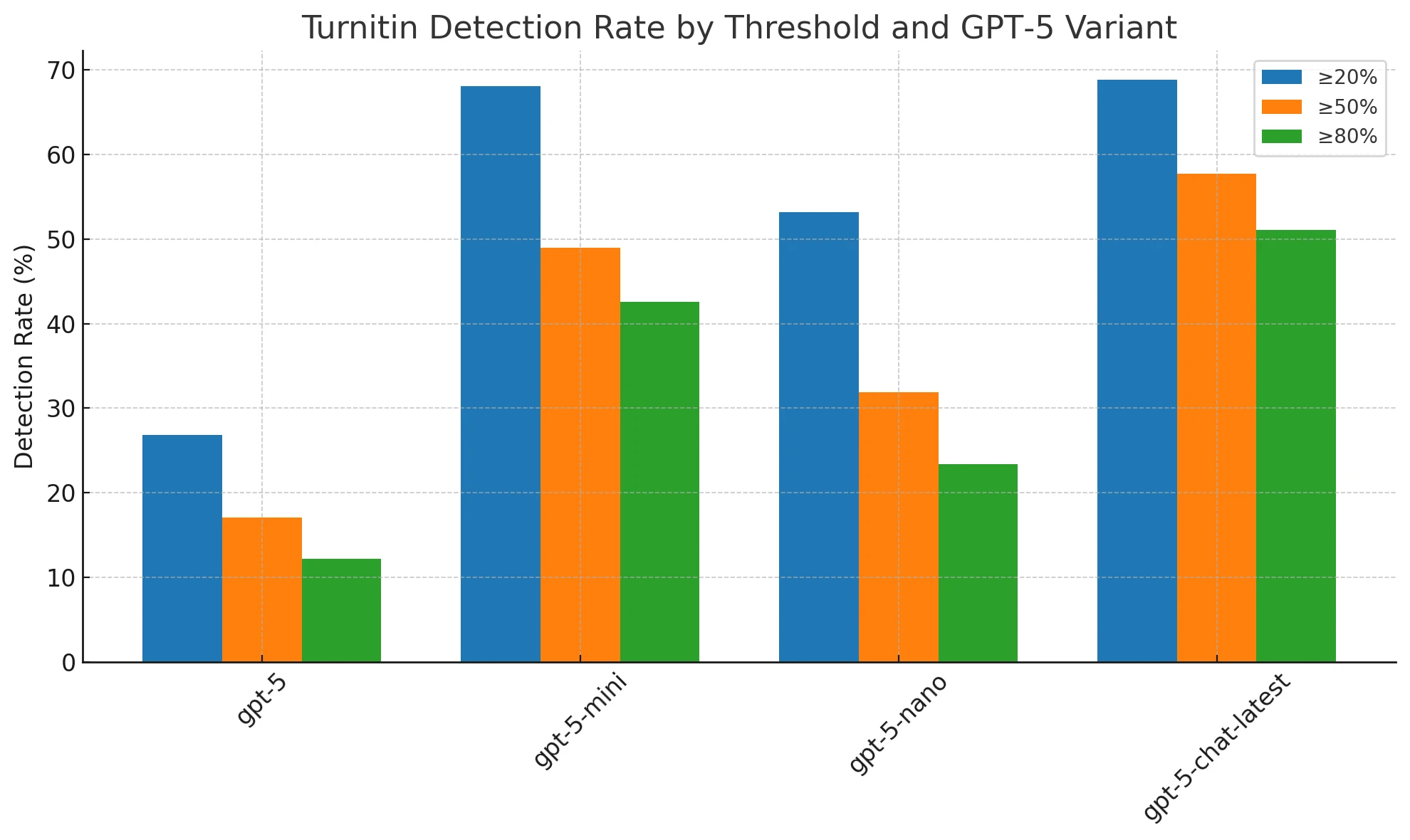

Interestingly, not all GPT-5 variants have the same detection rates. Here’s the twist: the flagship gpt-5 model is the least likely to get flagged (only about 17% of its passages scored 50 or higher). Meanwhile, the chat-optimized gpt-5-chat-latest is the worst at evading Turnitin, but about 42% of its passages still score below 50, and about 49% score below 80. So Turnitin does not reliably catch any GPT-5 variant, and the flagship is the hardest of all to detect.

Another surprising point: the scores are spread across the entire 0–100 range, with an average near 42 and a large standard deviation of around 43. In plain words, Turnitin tends to score a passage either near the maximum or right at zero, with relatively few passages in between. That two-cluster pattern is what we mean by bimodal.
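To see why a standard deviation about as large as the average hints at a bimodal pattern, here is a minimal sketch. Both score lists are invented for illustration and are not our data; they share the same average, but only the two-cluster list has a large spread.

```python
from statistics import mean, pstdev

# Hypothetical scores, invented for illustration (not the study's data).
two_cluster = [0, 0, 2, 5, 3, 0, 98, 100, 95, 100]      # piled up at both ends
one_cluster = [35, 40, 38, 45, 42, 41, 39, 44, 40, 39]  # bunched in the middle


def summarize(scores):
    """Return (average, population standard deviation) of the scores."""
    return mean(scores), pstdev(scores)


two_mean, two_sd = summarize(two_cluster)
one_mean, one_sd = summarize(one_cluster)

print(f"two clusters: mean={two_mean:.1f}, sd={two_sd:.1f}")
print(f"one cluster:  mean={one_mean:.1f}, sd={one_sd:.1f}")
```

The averages match, so the average alone cannot tell the two cases apart. The spread is what gives the two-cluster shape away.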

Detection Rate Table

| GPT-5 Variant | ≥20% | ≥50% | ≥80% |
|---|---|---|---|
| gpt-5 | 26.8% | 17.1% | 12.2% |
| gpt-5-mini | 68.1% | 48.9% | 42.6% |
| gpt-5-nano | 53.2% | 31.9% | 23.4% |
| gpt-5-chat-latest | 68.9% | 57.8% | 51.1% |
| Overall | 55.0% | 39.4% | 32.8% |

Here’s what the columns mean:

- ≥20%: Turnitin’s AI score is 20 or higher

- ≥50%: Turnitin’s AI score is 50 or higher

- ≥80%: Turnitin’s AI score is 80 or higher
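Each cell in the table is simply the share of passages at or above a threshold. A minimal sketch of that calculation follows; `detection_rate` is a hypothetical helper and `sample_scores` is made up for illustration, not taken from our dataset.

```python
def detection_rate(scores, threshold):
    """Percentage of passages whose AI score is at or above the threshold."""
    flagged = sum(1 for score in scores if score >= threshold)
    return 100 * flagged / len(scores)


# Hypothetical AI scores for ten passages (not the study's data).
sample_scores = [0, 0, 12, 25, 47, 50, 63, 81, 96, 100]

# One rate per threshold, mirroring the table's three columns.
rates = {t: detection_rate(sample_scores, t) for t in (20, 50, 80)}
print(rates)  # {20: 70.0, 50: 50.0, 80: 30.0}
```

Because every passage that clears 80 also clears 50 and 20, the rate can only stay the same or fall as the threshold rises.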

Visual Cues

- Score distribution: demonstrates the two-cluster pattern.

- Box plot by GPT-5 variant: highlights that the flagship model’s median is near zero, while the mini and chat variants skew higher.

- Detection-rate bars: quantify how detection rates fall as we demand stricter thresholds (20%, 50%, 80%).

The verdict

After looking at all this data, I can say that Turnitin is not reliable enough to be the final authority on whether something is GPT-5 text or not. Some people assume that an AI detector can catch everything automatically. However, these stats show that even with a “generous” threshold of ≥20%, about 45% of GPT-5 text is not flagged at all. If you push the threshold to 50% or 80%, the false negatives (real AI text that Turnitin doesn’t catch) only go up: to about 61% and 67% overall, and to about 83% and 88% for the flagship gpt-5.
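The miss rates follow directly from the detection-rate table: a miss rate is 100 minus the detection rate. A short sketch that does this subtraction for every variant and threshold is below. The numbers are copied from the table; the names `DETECTION_RATES` and `miss_rates` are just for illustration.

```python
# Detection rates (%) copied from the table above, keyed by score threshold.
DETECTION_RATES = {
    "gpt-5": {20: 26.8, 50: 17.1, 80: 12.2},
    "gpt-5-mini": {20: 68.1, 50: 48.9, 80: 42.6},
    "gpt-5-nano": {20: 53.2, 50: 31.9, 80: 23.4},
    "gpt-5-chat-latest": {20: 68.9, 50: 57.8, 80: 51.1},
    "Overall": {20: 55.0, 50: 39.4, 80: 32.8},
}


def miss_rates(detection):
    """Miss (false-negative) rate = 100 - detection rate, per variant and threshold."""
    return {
        variant: {t: round(100 - rate, 1) for t, rate in by_threshold.items()}
        for variant, by_threshold in detection.items()
    }


missed = miss_rates(DETECTION_RATES)
for variant, by_threshold in missed.items():
    print(variant, by_threshold)
```

For every variant the miss rate rises with the threshold, which is why a stricter cutoff trades fewer false alarms for more undetected AI text.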

Hence, if you have a “high-stakes” scenario where it is critical to confirm whether GPT-5 wrote something, Turnitin should be just one tool in your kit. Pair it with a manual review or try multiple AI detectors. No detector is built to achieve perfect AI detection, so you shouldn’t expect one from Turnitin. And that’s exactly what the data is telling us.

My one piece of advice for your academic or professional pursuits is to never rely on Turnitin alone for finding or proving AI content. It can be a helpful alarm bell, but stopping there is risky. You should combine methods, read and assess the text yourself, and see if it has that “too perfect” feel.

The Bottom Line

Yes, Turnitin can detect GPT-5, but not with consistent accuracy. The detection rates vary by GPT-5 variant, and the scores cluster at the two extremes of the scale. If you want to be as sure as possible, you need to check manually, use a combination of tools, or both. AI detection technology is evolving every day, and this cat-and-mouse game isn’t ending soon. So, for now, whether you want to bypass detection or confirm AI usage, don’t just trust Turnitin blindly. Take the data, weigh it carefully, and make your own final call!