Large language models (LLMs), such as those behind ChatGPT, Claude, and Llama, have revolutionized content creation. While many people dislike this sudden shift, it is abundantly clear that it is here to stay. I say this because these LLMs greatly reduce human effort, and that has been one of the main reasons many technologies have been adopted over the course of human history.

I know these LLMs are more than enough if anybody wants to create AI content. You can create hundreds of blog posts in just a few hours. You could fire almost all your content creators, as these AI models are enough to get the job done quickly and efficiently. However, it is not all roses and sunshine, because AI content detectors can easily detect most of this AI-generated content.

That said, AI detectors are not especially accurate and are prone to false positives. This is particularly annoying when people who still prefer to write their content themselves get flagged by these detectors for using AI. This is where our undetectable content generator comes into the picture.

If you regularly use AI models to generate content and want to avoid AI detectors, you are in the right place.

What is Deceptioner’s undetectable AI content generator?

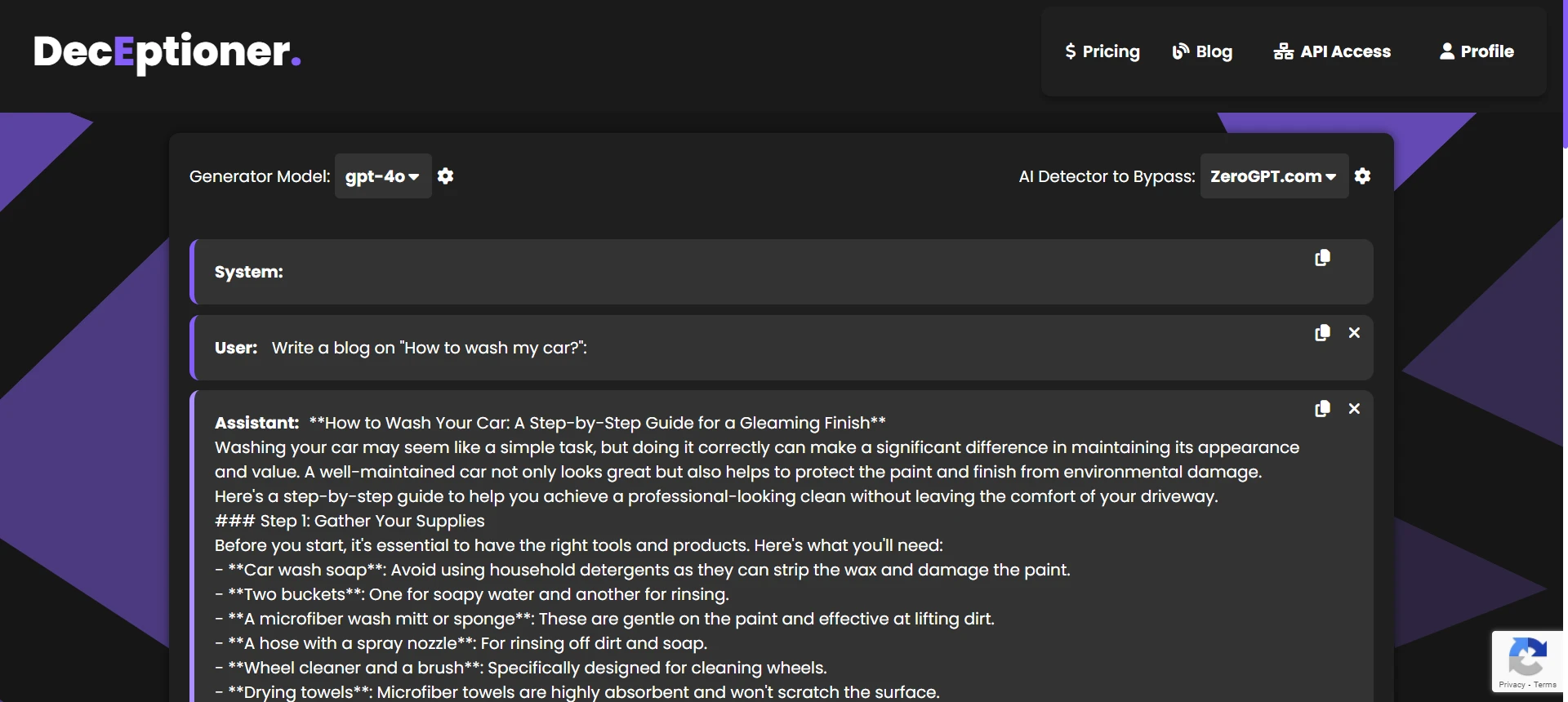

Deceptioner’s undetectable AI content generator works much like a regular AI content generator, or even ChatGPT, Claude, etc. The only benefit of using it over the others is that you will most likely be able to bypass the AI detector of your choice without needing to rewrite or paraphrase the output in any manner.

Is it guaranteed to bypass my selected AI detector?

No. Nothing is guaranteed in the world of machine learning, especially when it comes to LLMs. You will always need to check whether the content produced by our generator bypasses your chosen AI detector. The only advantage our tool gives you is better odds of bypassing AI detectors. It will also save you time, since you won't be banging your head against the wall trying to get past them.

How do you use our undetectable AI content generator?

Let us first look at the different settings you can use to your advantage.

Generator Settings

1. Generator Model: As the name suggests, this is the model that initially generates the content. You can choose from a wide range of models such as GPT-4o, Claude 3.5 Sonnet, Llama 3.1 70B, GPT-4 Turbo, GPT-4, etc. We will keep adding new models.

2. Temperature: If you are an LLM nerd, you already know this one. For everyone else, this parameter controls the randomness of the output. LLMs are next-token predictors, and if you set temperature to 0, the model picks the most probable token at every step. Running the same prompt multiple times should then give the same, or very nearly the same, completion. Setting it to anything above 0 makes the output more random and tends to give a different completion each time you run it.

3. Max Tokens: Tokens are not words. According to OpenAI, one token is roughly 3/4 of a word in English text. The Max Tokens parameter caps the number of tokens the model can generate. If a model returns incomplete output, try increasing the max tokens limit. However, there is an upper bound: our tool caps max tokens at 4000 for all listed models. If you find that too low, contact us at support@deceptioner.site.

4. Frequency Penalty: Frequency Penalty penalizes the model for reusing tokens (roughly, words) it has already generated. The penalty grows with every occurrence, so the more often a token has appeared, the less likely it is to appear again. This parameter doesn’t work with Anthropic’s Claude models.

5. Presence Penalty: Presence Penalty pushes the model toward a wider variety of tokens. For the end user it does much the same thing as frequency penalty, i.e. it reduces the probability of a token appearing repeatedly. However, its effect is less pronounced, because it applies one flat penalty to any token that has already appeared, no matter how many times. This parameter doesn’t work with Anthropic’s Claude models.
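To make the Temperature setting more concrete, here is a minimal sketch of temperature-scaled sampling over a toy vocabulary. It is the standard textbook technique (divide the model's raw scores, or logits, by the temperature, then apply softmax), not the internals of any particular model or of our tool. The vocabulary and scores are made up for illustration, and temperature 0 is treated as greedy decoding.

```python
import math
import random

def softmax_with_temperature(logits, temperature):
    """Turn raw next-token scores (logits) into probabilities, scaled by temperature."""
    if temperature <= 0:
        # Temperature 0 is treated as greedy decoding: all probability on the top token.
        best = max(range(len(logits)), key=lambda i: logits[i])
        return [1.0 if i == best else 0.0 for i in range(len(logits))]
    scaled = [x / temperature for x in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def sample_next_token(vocab, logits, temperature, rng=random):
    """Pick one token at random, weighted by the temperature-scaled probabilities."""
    probs = softmax_with_temperature(logits, temperature)
    return rng.choices(vocab, weights=probs, k=1)[0]

# Hypothetical scores for the word after a prompt like "The sky is"
vocab = ["blue", "clear", "falling", "green"]
logits = [4.0, 3.0, 1.0, 0.5]

print(sample_next_token(vocab, logits, 0))  # greedy: always "blue"
for t in (0.5, 1.0, 2.0):
    # Higher temperature flattens the distribution, so unlikely words get picked more often.
    print(t, [round(p, 3) for p in softmax_with_temperature(logits, t)])
```

Lower temperatures concentrate probability on "blue"; higher ones spread it across the other words, which is why outputs vary more between runs.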
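The "1 token ≈ 3/4 of a word" rule of thumb makes it easy to estimate how long an output can be. The helper functions below are hypothetical and only apply that rough English-text ratio; real token counts depend on each model's tokenizer.

```python
def estimate_words(tokens: int) -> int:
    """Rough rule of thumb for English text: 1 token is about 3/4 of a word."""
    return round(tokens * 3 / 4)

def estimate_tokens(words: int) -> int:
    """The same rule inverted: 1 word is about 4/3 tokens."""
    return round(words * 4 / 3)

print(estimate_words(4000))   # 3000
print(estimate_tokens(1500))  # 2000
```

So the 4000-token cap works out to roughly 3,000 words, and a 1,500-word article needs a Max Tokens value of about 2,000 or more.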
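The difference between the two penalties is easiest to see with numbers. OpenAI's API documentation describes both as adjustments to each token's logit: subtract the token's count so far times the frequency penalty, and subtract the presence penalty once if the token has appeared at all. The sketch below follows that published formula. Other providers may implement penalties differently, and the function name and toy data are hypothetical.

```python
from collections import Counter

def apply_penalties(logits, generated, frequency_penalty=0.0, presence_penalty=0.0):
    """Lower next-token logits based on tokens generated so far.

    For each token j with count c[j] in the output so far:
        logit[j] -= c[j] * frequency_penalty + (1 if c[j] > 0 else 0) * presence_penalty
    """
    counts = Counter(generated)
    adjusted = {}
    for token, logit in logits.items():
        c = counts[token]  # Counter returns 0 for tokens not seen yet
        adjusted[token] = logit - c * frequency_penalty - (1.0 if c > 0 else 0.0) * presence_penalty
    return adjusted

logits = {"great": 5.0, "good": 4.0, "fine": 3.0}
history = ["great", "great", "great", "good"]  # "great" used 3 times, "good" once

print(apply_penalties(logits, history, frequency_penalty=0.5))
# {'great': 3.5, 'good': 3.5, 'fine': 3.0}
print(apply_penalties(logits, history, presence_penalty=0.5))
# {'great': 4.5, 'good': 3.5, 'fine': 3.0}
```

With the same value of 0.5, frequency penalty knocks the thrice-used "great" down by 1.5, while presence penalty only subtracts a flat 0.5. That is why presence penalty's effect is less pronounced on heavily repeated words.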

Paraphraser Settings

1. AI Detector to Bypass: This is exactly what the name suggests. Select your preferred AI detector from the dropdown menu. The default value ‘None’ means you get whatever your selected ‘Generator Model’ generates, without any changes whatsoever.

2. Stealth: The ‘Stealth’ parameter controls how stealthy you want your text to be. In effect, it lets you balance readability against how undetectable the text is.

System Prompt

This is exactly what it sounds like. If you have used ChatGPT or Claude models, you already know it. A system prompt tells the model to produce text in a certain way, for example in a particular tone, format, or persona. However, how reliably it works is debatable.
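For the curious, here is roughly where a system prompt goes when you call the public OpenAI and Anthropic APIs directly. The sketch only builds the request bodies; it does not send them and does not show how our tool passes your system prompt internally. The prompt text, model IDs, and parameter values are example assumptions.

```python
import json

SYSTEM_PROMPT = "You are a friendly blog writer. Use short paragraphs and a conversational tone."
USER_PROMPT = "Write a 300-word blog post about indoor plants."

# OpenAI Chat Completions style: the system prompt is the first message in the list.
openai_payload = {
    "model": "gpt-4o",
    "messages": [
        {"role": "system", "content": SYSTEM_PROMPT},
        {"role": "user", "content": USER_PROMPT},
    ],
    "temperature": 0.7,
    "max_tokens": 800,
}

# Anthropic Messages style: the system prompt is a separate top-level "system" field.
anthropic_payload = {
    "model": "claude-3-5-sonnet-20240620",
    "system": SYSTEM_PROMPT,
    "messages": [{"role": "user", "content": USER_PROMPT}],
    "max_tokens": 800,
}

print(json.dumps(openai_payload, indent=2))
print(json.dumps(anthropic_payload, indent=2))
```

Anthropic's Messages API also has no frequency or presence penalty fields, which is consistent with those two settings not applying to Claude models.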

Pro Tips

1. Experiment with the generator models: If your only goal is to beat a certain AI detector, check out all the different models listed there. In my opinion, the best ones are gpt-4o, claude-sonnet-3-5, and llama-3.1-70b. Mix and match to see what works for you.

2. Play with the Frequency Penalty and Presence Penalty: Frequency Penalty and Presence Penalty can help if you are struggling to bypass a particular AI detector.

3. Experiment with the Stealth parameter: Sometimes setting the Stealth parameter to an extreme like 0 or 1 works wonders. A friend who regularly uses the tool told me that setting it to 0 in ZeroGPT.com mode worked like a charm. I don’t know whether that still works, since I didn’t like the output when I tested a setting of 0 earlier. However, you are free to try it.
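If you want to experiment systematically rather than at random, it can help to list the setting combinations up front and then check each output against your detector. The sketch below only enumerates combinations. The specific values, including treating Stealth as a number between 0 and 1 with 0.5 in between and using one shared value for both penalties, are hypothetical choices, not documented limits of the tool.

```python
from itertools import product

# Hypothetical values to try; the model names mirror the ones recommended above.
models = ["gpt-4o", "claude-sonnet-3-5", "llama-3.1-70b"]
stealth_values = [0.0, 0.5, 1.0]  # assumed 0-to-1 range
penalty_values = [0.0, 0.5]

def build_trials():
    """Return one settings dict per combination worth testing."""
    trials = []
    for model, stealth, penalty in product(models, stealth_values, penalty_values):
        # Penalties don't apply to Claude models, so only try 0 for them.
        if model.startswith("claude") and penalty != 0.0:
            continue
        trials.append({
            "model": model,
            "stealth": stealth,
            "frequency_penalty": penalty,
            "presence_penalty": penalty,
        })
    return trials

trials = build_trials()
print(len(trials))  # 15 combinations
for trial in trials[:3]:
    print(trial)
```

Skipping the penalty variations for Claude avoids wasting runs on settings that have no effect there.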

Conclusion

AI content generators have completely changed how we write content. I know many people absolutely hate AI-generated content, but even they won’t deny that it can help with brainstorming ideas or overcoming writer’s block.

I don’t know where on this spectrum you fall, but one thing is abundantly clear: AI content generators are here to stay. Along with them, AI detectors, and in turn tools to bypass AI detectors, will also remain in the game.

To be honest, I really don’t know how the landscape will change over the coming years. However, I am excited to see how the future of these AI generators turns out.