The short answer is NO. The longer answer is that the devil is in the details. Keep reading to find out why.

Why the hype around JustDone AI Detector?

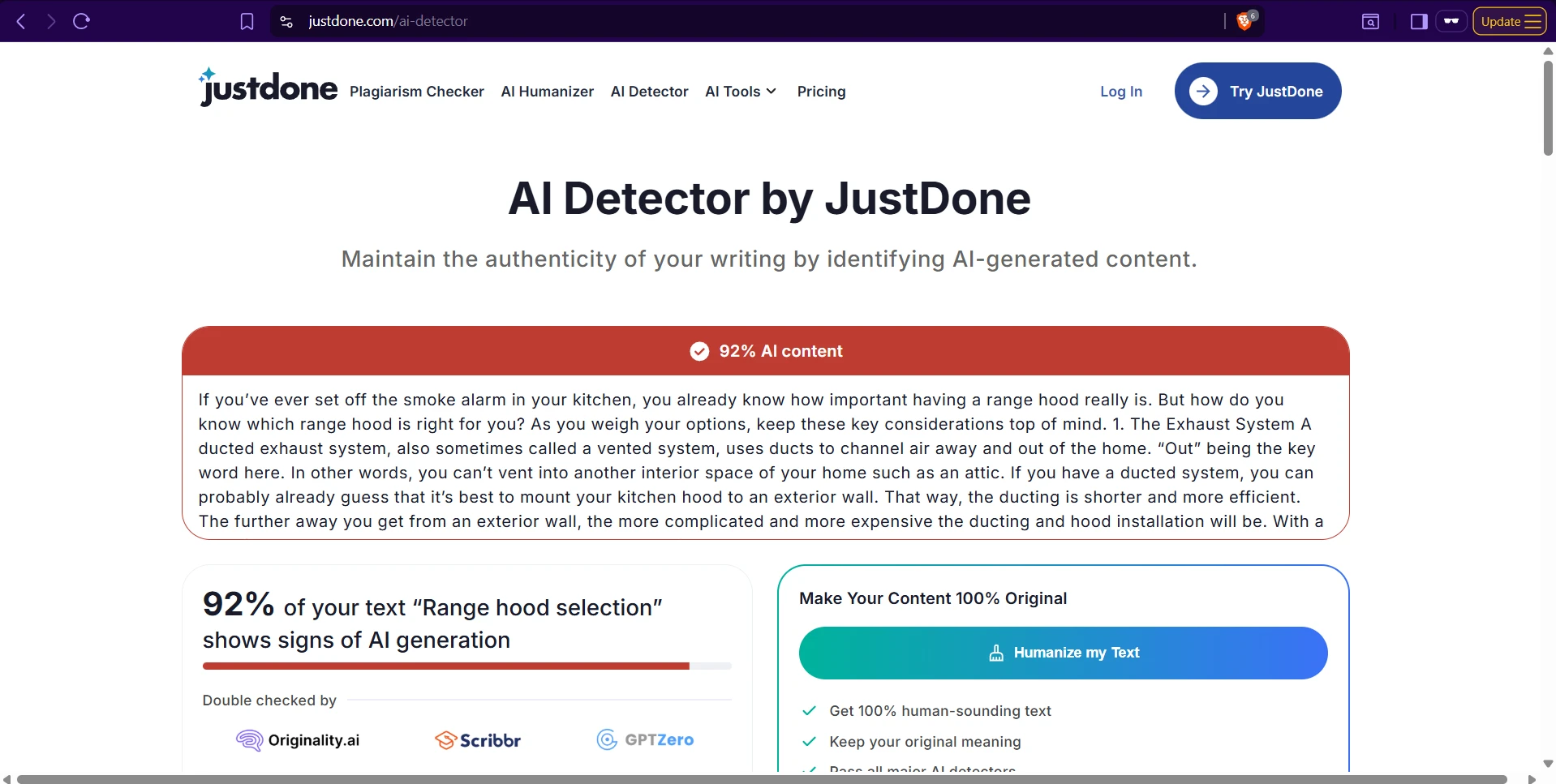

AI content detectors are increasingly used to decide whether a piece of text was written by a human or by an AI language model. JustDone AI Detector assigns each text an “AI Score” from 0 to 100, where a higher score means the text is more likely to be AI-generated. But how does it perform when put to the test on a dataset of 160 texts (82 AI-written and 78 human-written)? Let’s find out.

The dataset

We tested 160 texts: 82 were ground-truth AI and 78 were ground-truth human. That is nearly a 50-50 split, so the dataset is well balanced. Each text came with three key things:

- The complete text content.

- The “Written By” label (“AI” or “Human”).

- The JustDone AI Score (0–100).

First look at the scores

So, here’s what we found. The AI-written texts (82 total) had a mean AI Score of about 85.34, a standard deviation of about 8.62, a minimum of 70, and a maximum of 100. The human-written texts (78 total) had a mean of about 85.06 and a standard deviation of about 9.01, with the same minimum of 70 and maximum of 100.

If these statistical terms sound intimidating, think of the mean (average) as the center of the scores and the standard deviation as a measure of how spread out they are. A larger standard deviation means the scores are more scattered. Here, both standard deviations are around 8 to 9, so the two groups are spread out about equally.
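To make the mean and standard deviation concrete, here is a small sketch using Python’s `statistics` module on two made-up sets of scores (hypothetical, not taken from the dataset) that share a center but differ in spread. It uses the sample standard deviation (`statistics.stdev`); the article does not say whether its figures are sample or population values.

```python
import statistics

# Two hypothetical sets of AI Scores: same center, very different spread
tight = [84, 85, 86]
wide = [70, 85, 100]

for name, scores in [("tight", tight), ("wide", wide)]:
    center = statistics.mean(scores)   # the "center value"
    spread = statistics.stdev(scores)  # sample standard deviation
    print(f"{name}: mean={center}, stdev={spread}")
```

Both sets are centered on 85, but the second set’s standard deviation is 15 times larger because its scores sit much farther from the center.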

One big problem is that both AI and human texts receive scores between 70 and 100, and their means are nearly identical (85.34 vs. 85.06). That means the two score distributions basically overlap!
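One way to put a number on this overlap is a rank-based AUC: the probability that a randomly chosen AI text scores higher than a randomly chosen human text, with ties counted as half. This quantity equals the ROC AUC (via the Mann-Whitney U statistic), so 0.5 means the scores carry no information about authorship. The sketch below is a brute-force version for illustration only; the function name and toy scores are hypothetical.

```python
def rank_auc(ai_scores, human_scores):
    """Share of (AI, human) pairs in which the AI text scores higher; ties count 0.5."""
    wins = 0.0
    for a in ai_scores:
        for h in human_scores:
            if a > h:
                wins += 1.0
            elif a == h:
                wins += 0.5
    return wins / (len(ai_scores) * len(human_scores))

# Toy data: fully separated scores vs. identical score distributions
print(rank_auc([90, 95], [70, 75]))            # 1.0
print(rank_auc([70, 85, 100], [70, 85, 100]))  # 0.5
```

With 82 AI and 78 human texts, this loop compares 6,396 pairs, which is trivial; for much larger datasets a sort-based implementation would be preferable.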

We also measured the correlation between the “AI vs. human” label and the score. It came out at around 0.02, which means basically no relationship, like random noise.
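The article doesn’t specify which correlation was used. A common choice for a binary label against a numeric score is the point-biserial correlation, which is simply Pearson’s r with the label coded as 1 (AI) or 0 (human). As a rough consistency check, it can be reconstructed from the group summaries reported above. This sketch assumes those standard deviations are population (ddof=0) values and works from rounded means, so the result is approximate; the function name is hypothetical.

```python
import math

def point_biserial_from_summary(n1, mean1, sd1, n0, mean0, sd0):
    """Pearson correlation between a 0/1 label and a score, rebuilt from group summaries.

    Group 1 = AI texts (label 1), group 0 = human texts (label 0).
    Assumes sd1 and sd0 are population (ddof=0) standard deviations.
    """
    n = n1 + n0
    p, q = n1 / n, n0 / n
    # Total variance = weighted within-group variance + between-group variance
    within = (n1 * sd1**2 + n0 * sd0**2) / n
    between = p * q * (mean1 - mean0) ** 2
    total_sd = math.sqrt(within + between)
    return (mean1 - mean0) / total_sd * math.sqrt(p * q)

# Group summaries reported in the article (AI vs. human)
r = point_biserial_from_summary(82, 85.34, 8.62, 78, 85.06, 9.01)
print(round(r, 3))  # 0.016, i.e. roughly the 0.02 reported
```

A 0.28-point gap in means against a spread of roughly 8.8 points is simply too small to produce a meaningful correlation.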

Thresholding the AI Score

To turn the AI Score into a yes/no verdict, we apply a threshold T: if a text’s AI Score is T or higher, it is flagged as AI; otherwise it is labeled human. On this dataset, T = 76 gave the highest overall accuracy.
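The thresholding rule, and one simple way to find an accuracy-maximizing T, can be sketched as follows: sweep every integer threshold from 0 to 100 and keep the most accurate one. This is an illustration under assumptions; the function names and toy data are hypothetical, and the article does not say exactly how T = 76 was searched for.

```python
def flag_as_ai(score, threshold):
    # Rule described above: a score of T or higher is flagged as AI
    return score >= threshold

def accuracy_at(scores, labels, threshold):
    """Fraction of texts whose flag matches the ground truth (True = AI-written)."""
    correct = sum(flag_as_ai(s, threshold) == is_ai for s, is_ai in zip(scores, labels))
    return correct / len(scores)

def best_threshold(scores, labels, candidates=range(0, 101)):
    """Sweep integer thresholds; return the most accurate one (lowest T wins ties)."""
    return max(candidates, key=lambda t: (accuracy_at(scores, labels, t), -t))

# Hypothetical toy data, not the 160-text dataset
scores = [70, 95, 82, 74, 88, 79]
labels = [False, True, True, False, True, False]
t = best_threshold(scores, labels)
print(t, accuracy_at(scores, labels, t))  # 80 1.0
```

Because the threshold is tuned on the same texts it is then evaluated on, the resulting accuracy is, if anything, an optimistic estimate of how the detector would do on new texts.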

Confusion matrix at T = 76

- True AI texts (82 total):

  - 71 are correctly flagged as AI (True Positives)

  - 11 are misclassified as human (False Negatives)

- True Human texts (78 total):

  - 17 are correctly flagged as human (True Negatives)

  - 61 are misclassified as AI (False Positives)
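Tallying these four cells from per-text labels and flags is straightforward. Here is a minimal sketch, treating “AI” as the positive class; the function name and toy data are hypothetical.

```python
def confusion_counts(labels, flagged):
    """Count TP, FN, TN, FP with "AI" as the positive class.

    labels  -- ground truth per text, True if AI-written
    flagged -- detector verdict per text, True if flagged as AI
    """
    counts = {"TP": 0, "FN": 0, "TN": 0, "FP": 0}
    for is_ai, flagged_ai in zip(labels, flagged):
        if is_ai:
            counts["TP" if flagged_ai else "FN"] += 1
        else:
            counts["FP" if flagged_ai else "TN"] += 1
    return counts

# Hypothetical toy data: flag = score >= 76, mirroring the rule above
scores = [91, 72, 80, 77, 70]
labels = [True, True, False, False, False]
flagged = [s >= 76 for s in scores]
print(confusion_counts(labels, flagged))  # {'TP': 1, 'FN': 1, 'TN': 1, 'FP': 2}
```

A quick sanity check on any confusion matrix is that each class adds up to its size; here 71 + 11 = 82 AI texts and 17 + 61 = 78 human texts.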

Performance metrics

Here are the main performance numbers at T = 76:

- Accuracy: 55% (share of all texts classified correctly)

- Precision: 53.8% (of those flagged AI, how many were truly AI)

- Recall: 86.6% (of all AI texts, how many were caught)

- F1 Score: 66.4% (harmonic mean of precision and recall)

- Balanced Accuracy: 54.2% (average recall for both classes)

- ROC AUC: 51.4% (computed across all thresholds rather than only at T = 76; 50% is random guessing)
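All of the threshold-based figures above follow directly from the four counts in the confusion matrix. Here is a minimal sketch that recomputes them (plus the false positive rate) from TP = 71, FN = 11, TN = 17, FP = 61; the function name is hypothetical. ROC AUC is left out because it needs the raw scores, not just the counts at a single threshold.

```python
def metrics_from_counts(tp, fn, tn, fp):
    """Standard binary-classification metrics, with "AI" as the positive class."""
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)       # true positive rate on AI texts
    specificity = tn / (tn + fp)  # true negative rate on human texts
    return {
        "accuracy": (tp + tn) / (tp + fn + tn + fp),
        "precision": precision,
        "recall": recall,
        "f1": 2 * precision * recall / (precision + recall),
        "balanced_accuracy": (recall + specificity) / 2,
        "false_positive_rate": fp / (fp + tn),  # = 1 - specificity
    }

m = metrics_from_counts(tp=71, fn=11, tn=17, fp=61)
for name, value in m.items():
    print(f"{name}: {value:.1%}")
# accuracy: 55.0%
# precision: 53.8%
# recall: 86.6%
# f1: 66.4%
# balanced_accuracy: 54.2%
# false_positive_rate: 78.2%
```

The last line confirms the roughly 78% false positive rate: 61 of the 78 human texts were flagged as AI.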

Practical impacts

- A large share of human-written text gets flagged as AI: 61 of 78 human texts, a false positive rate of about 78%. If you rely purely on JustDone, there is a massive risk of labeling genuine human authors as cheaters.

- The detector lumps pretty much everything into “AI-like” territory. All texts scored high (70–100), with nearly identical averages for AI and human texts.

- It does catch about 87% of AI text, but at a huge cost: it also flags most legitimate human work.

Guidelines on how to use it responsibly

The takeaway is that you shouldn’t treat the JustDone AI Score as conclusive proof that something was AI-generated. Treat it more like an indicator. If it’s suspiciously high, consider a manual review by:

- Comparing the text with the author’s writing and style in other works.

- Asking for process evidence like drafts or timestamps.

- Getting a domain expert to see if the text has strange leaps in logic.

Most importantly, it’s not a good idea to fail students or reject scholarship applicants purely based on JustDone’s AI Score.

Limitations

Keep in mind that this analysis is based on a single dataset of 160 texts, and how those texts were sampled is unknown. Your use case might differ. JustDone may also update its model in the future, so results can change.

The Bottom Line

The short answer is that JustDone AI Detector is not accurate enough: even at its best threshold, it classified only 55% of the texts correctly. While it catches a high percentage of AI-generated text (high recall), it also flags a massive portion of real human writing as AI.

If you’re in a scenario where false accusations have serious consequences, avoid relying on JustDone alone. Use it only as an extra piece of evidence, combined with other knowledge and context. Relying on it blindly is a recipe for trouble, especially when real human writers can get accused of using AI.
