Quillbot is widely known for helping with rewriting, summarizing text, and polishing grammar. But can we really trust Quillbot’s AI detector to tell human-written text from AI-generated text? The short answer is “maybe.” The longer answer takes some unpacking, so keep reading.

Why Quillbot’s AI detector might not be fully reliable

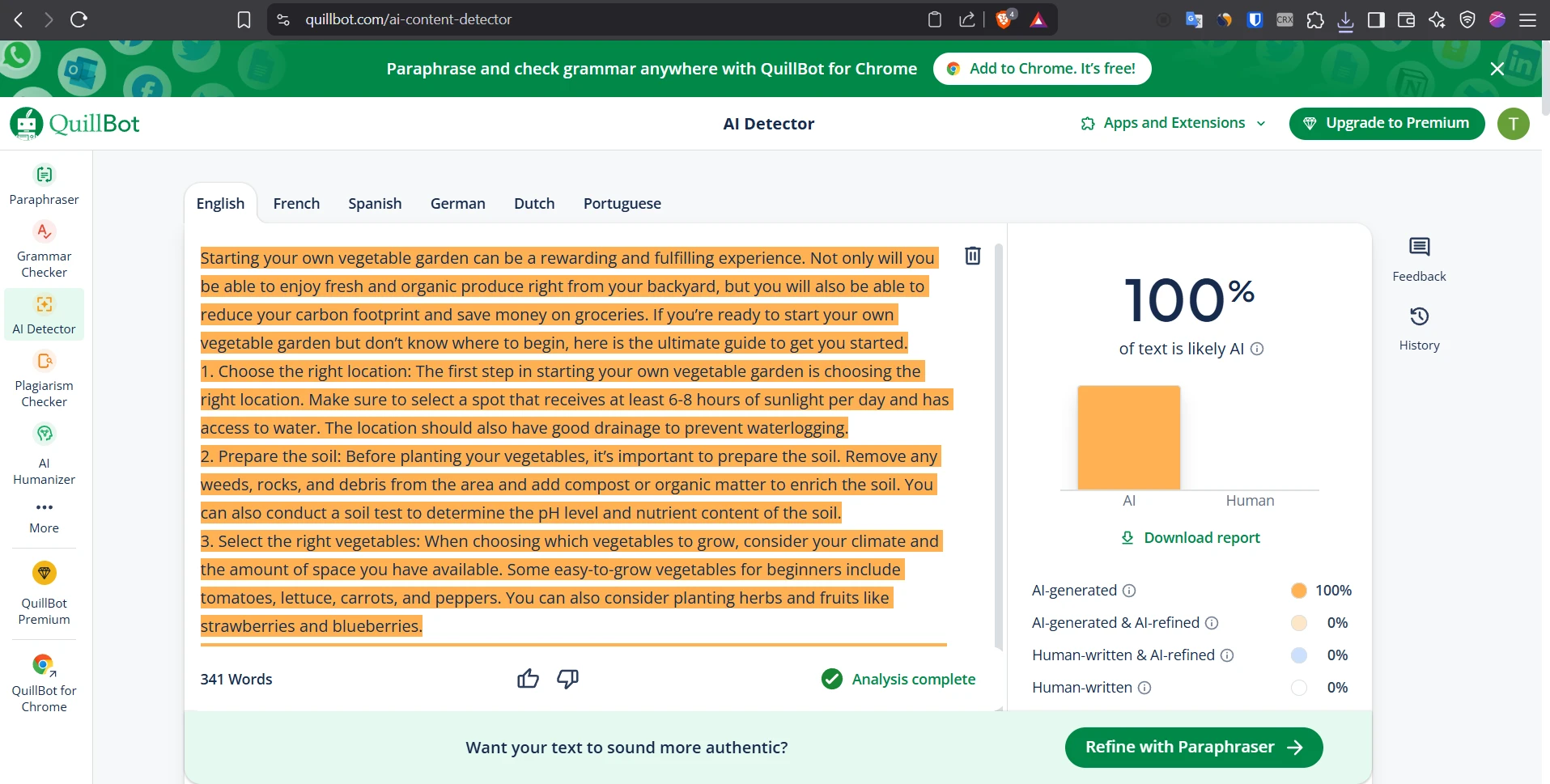

The thing is that Quillbot’s public AI Content Detector (tested on 6th June 2025) prioritizes avoiding false alarms on genuine human text: it rarely flags a passage as AI when a human actually wrote it. That sounds good in principle, but it comes with a serious drawback: the detector also misses a big chunk of real AI text. In other words, it is tuned to be extremely cautious before calling your text “AI,” so many AI passages slip through undetected.

You can also read my post on Quillbot’s effectiveness against Copyleaks.

Our experiment design (brief overview)

We collected 160 short passages:

- 82 were entirely authored by ChatGPT (GPT-4o).

- 78 were actually written by humans (college essays & tech blog drafts).

Each passage’s ground-truth label (“AI” or “Human”) came from its actual author. We then submitted each piece to Quillbot’s AI Content Detector (web version) and recorded its verdict: “AI Content Detected” or “Human-Written.”
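If you want to repeat the test, it helps to normalize the verdicts as you record them. The sketch below assumes you copy Quillbot’s two verdict strings by hand. `verdict_to_label` and `results_to_csv` are hypothetical helpers, not part of any Quillbot API. They produce a CSV with the same `text`, `label` and `quillbot_pred` columns that the appendix script reads.

```python
import csv
import io

# Hypothetical mapping: the two verdict strings shown in Quillbot's web UI
# -> the short labels stored in the quillbot_pred column.
VERDICT_TO_LABEL = {
    "AI Content Detected": "AI",
    "Human-Written": "Human",
}


def verdict_to_label(verdict: str) -> str:
    """Normalize a copied verdict string to "AI" or "Human"."""
    cleaned = verdict.strip()
    if cleaned not in VERDICT_TO_LABEL:
        raise ValueError(f"Unexpected verdict: {verdict!r}")
    return VERDICT_TO_LABEL[cleaned]


def results_to_csv(rows) -> str:
    """Turn (text, ground_truth_label, verdict) triples into CSV text
    with the columns text, label, quillbot_pred."""
    buf = io.StringIO()
    writer = csv.writer(buf, lineterminator="\n")
    writer.writerow(["text", "label", "quillbot_pred"])
    for text, label, verdict in rows:
        writer.writerow([text, label, verdict_to_label(verdict)])
    return buf.getvalue()


print(results_to_csv([("A short sample passage.", "Human", "Human-Written")]))
```

Raising on an unexpected verdict string is deliberate. A typo in a hand-copied verdict should stop the run rather than silently become a wrong label.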

Here is how we measured everything:

- Accuracy → Overall fraction of correct predictions.

- Precision → Among all passages flagged as AI, how many were really AI?

- Recall → Among all AI passages, how many did Quillbot catch?

- F1 Score → Harmonic mean of precision and recall.
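All four metrics come straight from the four confusion-matrix counts, so a few lines of plain Python can recompute them. This is a minimal sketch with AI as the positive class. `Counts` and `metrics` are illustrative names. The counts plugged in are the ones from our confusion matrix: 50 caught, 32 missed, 0 false alarms and 78 correctly cleared.

```python
from dataclasses import dataclass


@dataclass
class Counts:
    """Confusion-matrix counts with "AI" treated as the positive class."""
    tp: int  # AI passages flagged as AI
    fp: int  # human passages flagged as AI
    fn: int  # AI passages labelled "Human-Written"
    tn: int  # human passages labelled "Human-Written"


def metrics(c: Counts) -> dict:
    """Accuracy, precision, recall and F1 for the positive (AI) class."""
    total = c.tp + c.fp + c.fn + c.tn
    precision = c.tp / (c.tp + c.fp) if (c.tp + c.fp) else 0.0
    recall = c.tp / (c.tp + c.fn) if (c.tp + c.fn) else 0.0
    # Harmonic mean of precision and recall
    f1 = 2 * precision * recall / (precision + recall) if (precision + recall) else 0.0
    return {
        "accuracy": (c.tp + c.tn) / total,
        "precision": precision,
        "recall": recall,
        "f1": f1,
    }


# Counts from the confusion matrix reported in this post
print({k: round(v, 3) for k, v in metrics(Counts(tp=50, fp=0, fn=32, tn=78)).items()})
# → {'accuracy': 0.8, 'precision': 1.0, 'recall': 0.61, 'f1': 0.758}
```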

Headline numbers

- Overall accuracy = 80% (128 out of 160 passages)

- Precision on AI class = 1.00 (Quillbot never wrongly flagged a human passage as AI)

- Recall on AI class ≈ 0.61 (meaning Quillbot missed ~39% of actual AI passages, 32 of 82)

Full classification report

Below is our full classification report; feel free to quote or embed it:

| Class | Precision | Recall | F1 Score | Support |
|---|---|---|---|---|
| AI | 1.000 | 0.610 | 0.758 | 82 |
| Human | 0.709 | 1.000 | 0.830 | 78 |
| Overall accuracy | | | 0.800 | 160 |
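As a sanity check, you can regenerate the whole report with scikit-learn from the confusion-matrix counts alone. The trick is to expand the counts back into one label/prediction pair per passage. This sketch reconstructs those lists from the counts; it does not reload the original passages.

```python
from sklearn.metrics import classification_report

# Expand the confusion-matrix counts into one (label, prediction) pair per passage:
# 50 AI→AI, 32 AI→Human, 0 Human→AI, 78 Human→Human.
y_true = ["AI"] * 82 + ["Human"] * 78
y_pred = ["AI"] * 50 + ["Human"] * 32 + ["Human"] * 78

# Printed report, in the same format as the appendix script
print(classification_report(y_true, y_pred, digits=3))

# Dictionary form, handy for checking individual cells
report = classification_report(y_true, y_pred, output_dict=True)
print(round(report["Human"]["precision"], 3), round(report["AI"]["f1-score"], 3))
```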

Confusion matrix (with a plain-language explainer)

| Actual ↓ / Predicted → | AI | Human | Note |
|---|---|---|---|
| AI text | 50 | 32 | 50 true positives; 32 false negatives (missed) |
| Human text | 0 | 78 | 0 false positives; 78 true negatives |

- False negatives (32): these passages were AI, but Quillbot’s detector said “Human-Written.”

- False positives (0): none of the 78 human passages was flagged as “AI.”
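One pitfall arises when reading these cells programmatically. Unless you pass `labels`, scikit-learn’s `confusion_matrix` orders rows and columns by sorted label. “AI” sorts before “Human”, so the default printout matches the table above. The sketch below passes `labels=["Human", "AI"]` instead, so that `.ravel()` returns the cells in `tn, fp, fn, tp` order with AI as the positive class. The label lists are again rebuilt from our reported counts.

```python
from sklearn.metrics import confusion_matrix

# Rebuilt from the reported counts: 82 AI passages (50 caught, 32 missed), 78 human passages.
y_true = ["AI"] * 82 + ["Human"] * 78
y_pred = ["AI"] * 50 + ["Human"] * 32 + ["Human"] * 78

# Negative class first, positive class ("AI") second, so ravel() gives tn, fp, fn, tp.
tn, fp, fn, tp = confusion_matrix(y_true, y_pred, labels=["Human", "AI"]).ravel()
print(f"TP={tp} FN={fn} FP={fp} TN={tn}")  # → TP=50 FN=32 FP=0 TN=78
```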

Interpretation

- Ultra-conservative tuning. Quillbot avoids accusing real human writers: precision is perfect, but the price is that it missed 39% of the AI passages.

- Practical tip: a “Human-Written” result is not proof of originality. If the stakes are high, combine Quillbot with other signals or style checks to be sure.

Technical appendix

If you want to see the Python code that was used to compute the metrics, you can peek at the snippet below:

Python code used for metrics

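```python
import pandas as pd
from sklearn.metrics import classification_report, confusion_matrix

# Columns: text, label (ground truth, "AI"/"Human"), quillbot_pred (verdict mapped to "AI"/"Human")
df = pd.read_csv("Quillbot Accuracy Test.csv")

# Per-class precision, recall, F1 and support, to three decimals
print(classification_report(df["label"], df["quillbot_pred"], digits=3))

# Rows = actual, columns = predicted, both in the order AI, Human
print(confusion_matrix(df["label"], df["quillbot_pred"], labels=["AI", "Human"]))
```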

Metric definitions

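With AI as the positive class: TP = AI passages flagged as AI, FP = human passages flagged as AI, FN = AI passages marked “Human-Written,” TN = human passages marked “Human-Written.”

- Precision = TP / (TP + FP) → “Of everything flagged as AI, how many are truly AI?”

- Recall = TP / (TP + FN) → “Out of all AI texts, how many did Quillbot catch?”

- F1 = harmonic mean of Precision & Recall = 2 × Precision × Recall / (Precision + Recall).

- Accuracy = (TP + TN) / total.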
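The formulas above treat AI as the positive class. Applying them with Human as the positive class reproduces the Human row of the report. In that case TP = 78, FP = 32 (the AI passages labelled “Human-Written”) and FN = 0:

$$
\begin{aligned}
\text{Precision}_{\text{Human}} &= \frac{78}{78 + 32} = \frac{78}{110} \approx 0.709 \\
\text{Recall}_{\text{Human}} &= \frac{78}{78 + 0} = 1.000 \\
F_1^{\text{Human}} &= \frac{2 \cdot 78}{2 \cdot 78 + 32 + 0} = \frac{156}{188} \approx 0.830 \\
\text{Accuracy} &= \frac{50 + 78}{160} = \frac{128}{160} = 0.800
\end{aligned}
$$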

Limitations & future work

1. Roughly balanced but small corpus (160 short passages). Results may differ for long texts or highly domain-specific writing.

2. Binary verdict only. We recorded only the final verdict, but Quillbot also shows a percentage score. Applying a lower cutoff to that score might improve recall, likely at some cost to precision.
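We did not record the percentage scores, so we can’t say what a different cutoff would do in practice. If you do record them, a threshold sweep shows the precision/recall trade-off directly. This is a hypothetical sketch that makes two assumptions. First, each passage’s score is the AI percentage Quillbot displays (0 to 100). Second, a passage counts as flagged when its score meets the cutoff. The scores in the usage lines are made-up toy values, not data from our test.

```python
from typing import Sequence


def sweep_thresholds(labels: Sequence[str], ai_scores: Sequence[float],
                     thresholds: Sequence[float]) -> list[tuple[float, float, float]]:
    """Return (threshold, precision, recall) for the AI class at each cutoff.

    Assumption: ai_scores holds the AI percentage shown by the detector (0-100),
    and a passage counts as flagged when its score is >= the threshold.
    """
    results = []
    for t in thresholds:
        flagged = [s >= t for s in ai_scores]
        tp = sum(f and y == "AI" for f, y in zip(flagged, labels))
        fp = sum(f and y == "Human" for f, y in zip(flagged, labels))
        fn = sum(not f and y == "AI" for f, y in zip(flagged, labels))
        # Precision is undefined when nothing is flagged; report 0.0 in that case.
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        results.append((t, precision, recall))
    return results


# Toy usage with made-up scores (NOT from our experiment).
toy_labels = ["AI", "AI", "AI", "Human", "Human"]
toy_scores = [90, 55, 30, 20, 5]
for t, p, r in sweep_thresholds(toy_labels, toy_scores, [80, 50, 25, 15]):
    print(f"cutoff {t}: precision={p:.2f}, recall={r:.2f}")
```

Lowering the cutoff can only flag more passages, so recall never drops as you go down. In the toy data, the lowest cutoff catches every AI passage but also flags one human passage, pulling precision down to 0.75.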

So can we trust Quillbot’s AI Detector?

Quillbot’s AI detector is very safe if your top priority is “Don’t accidentally flag a human writer as AI.” However, if you need to be sure that no AI text gets through, Quillbot alone will fail you: it missed roughly 2 in 5 AI passages in our test. My personal opinion is that relying solely on Quillbot is not enough if your main objective is ensuring authenticity. Combine it with other signals or some style analysis, especially for high-stakes tasks like university essays or serious research.

TL;DR

Quillbot’s AI detector tries really hard never to point fingers at innocent human writing, but it also lets about 40% of AI text slip by. So a “Human-Written” tag from Quillbot is weak evidence; don’t treat it as a guarantee.

The Bottom Line

Quillbot does a decent job of not misidentifying genuine human writing. If your aim is to weed out all traces of AI, though, tread carefully: look for more robust solutions, or pair Quillbot with additional style checks or plagiarism scanners. Relying on a single “Human-Written” verdict alone is not wise. After all, 32 of the 82 AI passages in our test set slipped right through Quillbot’s net.