The short answer is yes: they are quite similar. The longer answer is that the devil is in the details. Keep reading to find out more.

Why Do Both Seem Similar?

The reason is that both Turnitin and GPTZero use some form of AI-likelihood scoring. So if you are expecting GPTZero to be drastically different from Turnitin, you might be disappointed. I personally tested 160 text passages on both, and the results were surprising.

The Dataset & Score Mechanics

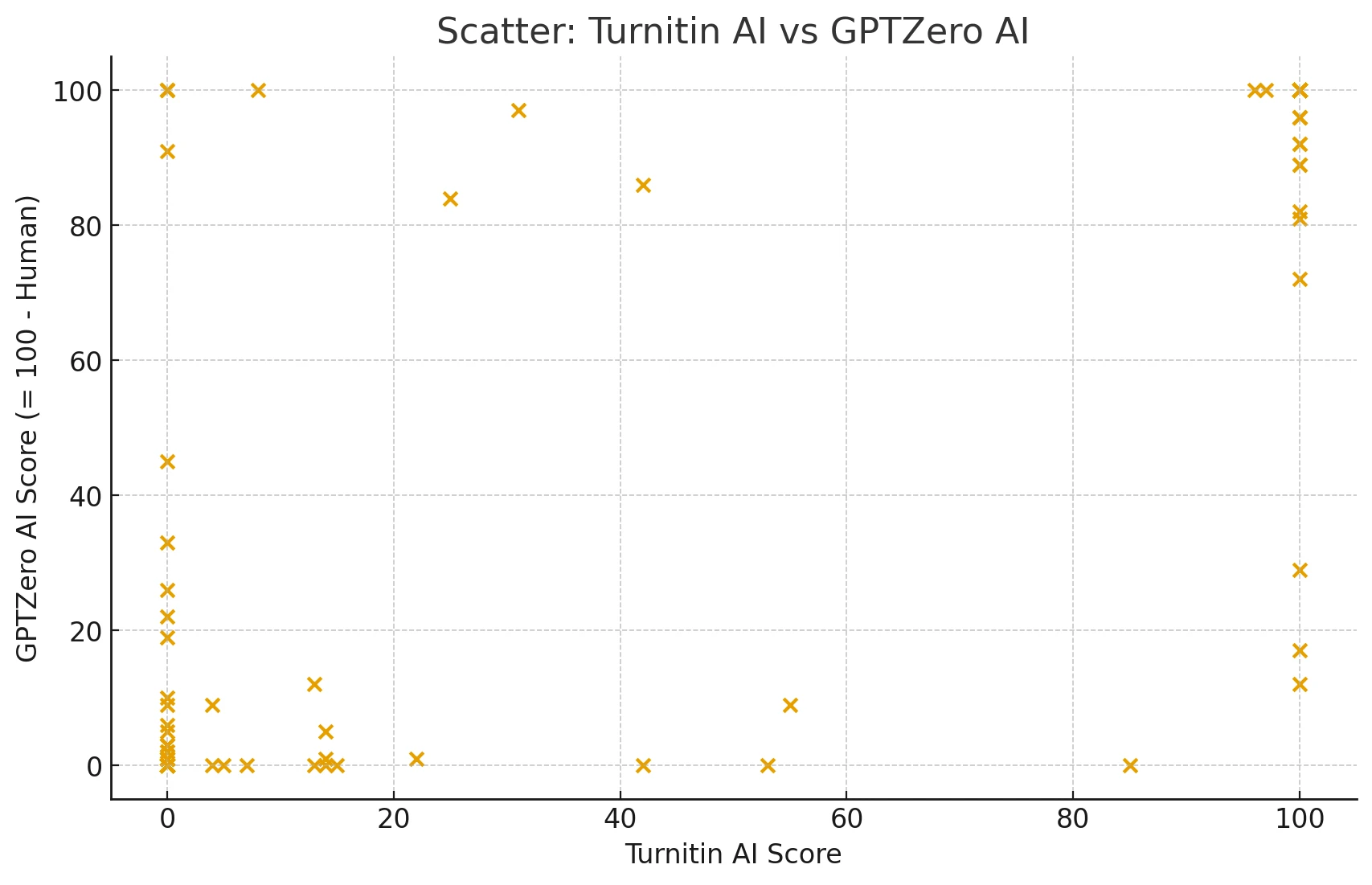

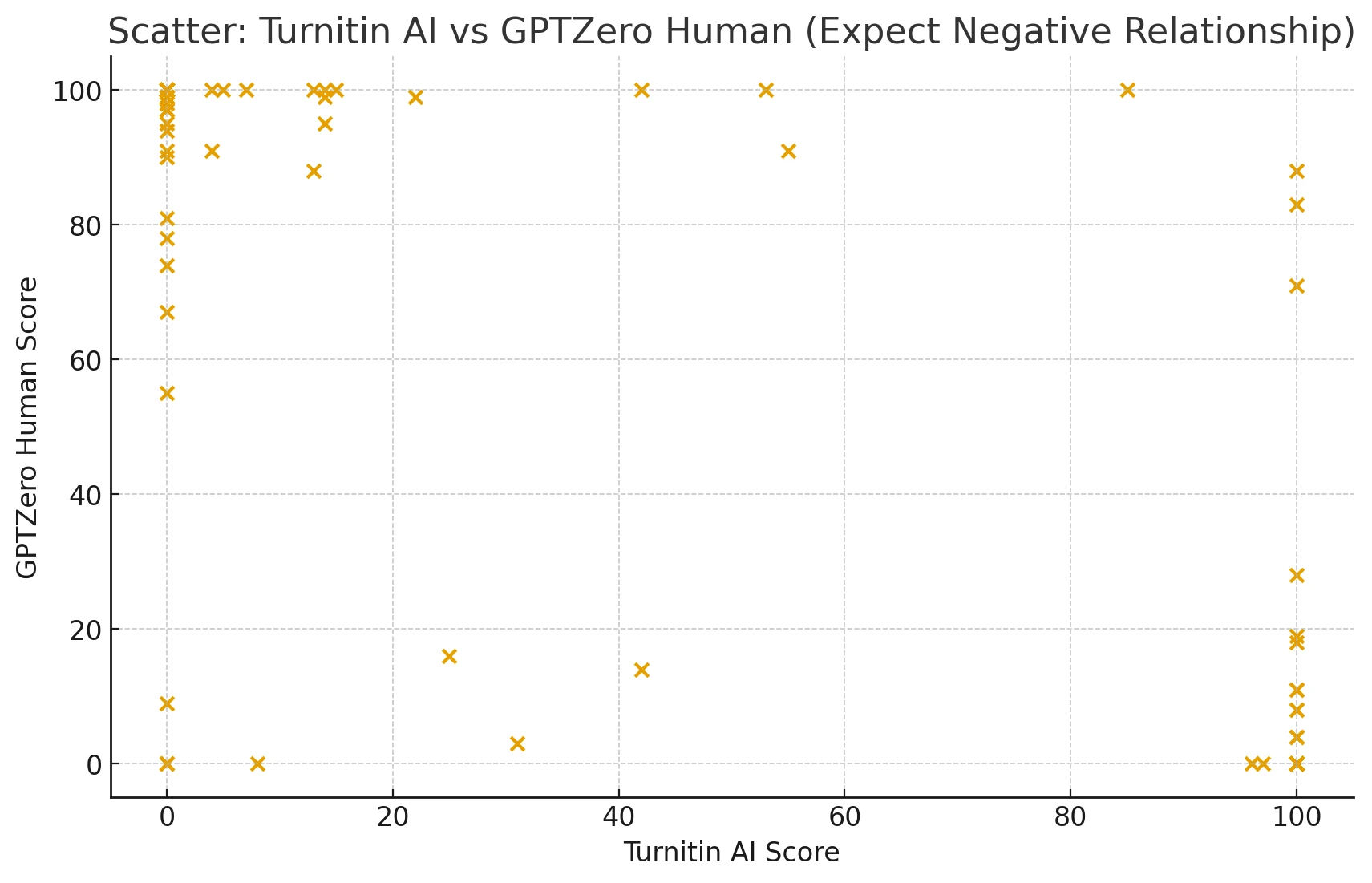

We had 160 passages, each assigned a “Turnitin AI Score” from 0 to 100 (where higher means more likely AI-written). Meanwhile, GPTZero calculates a “Human Score” from 0 to 100 (where higher means more likely written by a human). To compare them directly, we define a “GPTZero AI Score” = 100 – GPTZero Human Score. That way, a higher GPTZero AI Score means more likely to be AI, just like Turnitin’s scale.
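The rescaling is simple enough to sketch in a few lines of Python. The function name `gptzero_ai_score` and the sample values below are ours for illustration; they are not part of either tool's API and are not taken from the 160-passage dataset.

```python
def gptzero_ai_score(human_score: float) -> float:
    """Flip GPTZero's 0-100 Human Score so that higher means more likely AI."""
    if not 0 <= human_score <= 100:
        raise ValueError("Human Score must be between 0 and 100")
    return 100 - human_score


# Hypothetical Human Scores, made up for this example.
sample_human_scores = [88, 50, 15]
sample_ai_scores = [gptzero_ai_score(h) for h in sample_human_scores]
print(sample_ai_scores)  # [12, 50, 85]
```

After this step, both tools point the same way: a high number means "more likely AI."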

As we all know, these numbers are not perfect. But we wanted to see if Turnitin’s AI Score and GPTZero’s AI Score truly line up.

The Correlation Results

We used “Pearson correlation,” which is basically a measure of linear relationship (from –1 to +1), and “Spearman correlation,” which is a rank-based measure (also from –1 to +1).

- Turnitin AI Score vs GPTZero AI Score (100 – Human):
  - Pearson correlation: 0.856 (which is pretty high)
  - Spearman correlation: 0.788 (also quite strong)

In simpler words, if Turnitin flags something as AI, GPTZero often does the same. And if you look at Turnitin AI Score vs GPTZero Human, the correlations (Pearson: –0.856, Spearman: –0.788) show a clear negative trend. Basically, if Turnitin calls it more AI, GPTZero calls it less human, which obviously makes sense.
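For readers who want to run the same two measures on their own scores, here is a minimal pure-Python sketch. The six score pairs are hypothetical and are not the article's data, and the helper names (`pearson`, `average_ranks`, `spearman`) are ours. It also shows why the correlations against the Human Score are exact mirror images: subtracting from 100 only flips the sign.

```python
from math import sqrt


def pearson(x, y):
    """Pearson correlation: co-variation divided by the product of spreads."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / sqrt(var_x * var_y)


def average_ranks(values):
    """Rank values 1..n; tied values share the average of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1  # positions are 0-based, ranks are 1-based
        for k in range(i, j + 1):
            ranks[order[k]] = avg
        i = j + 1
    return ranks


def spearman(x, y):
    """Spearman correlation: Pearson correlation computed on the ranks."""
    return pearson(average_ranks(x), average_ranks(y))


# Hypothetical scores for six passages (NOT the 160-passage dataset).
turnitin_ai = [5, 12, 40, 55, 80, 97]
gptzero_human = [90, 93, 70, 35, 30, 4]
gptzero_ai = [100 - h for h in gptzero_human]

print("vs GPTZero AI:   ", pearson(turnitin_ai, gptzero_ai), spearman(turnitin_ai, gptzero_ai))
print("vs GPTZero Human:", pearson(turnitin_ai, gptzero_human), spearman(turnitin_ai, gptzero_human))
```

The second line prints the same magnitudes as the first with the signs reversed, which is the same pattern as 0.856 / –0.856 and 0.788 / –0.788 above.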

Classification Agreement

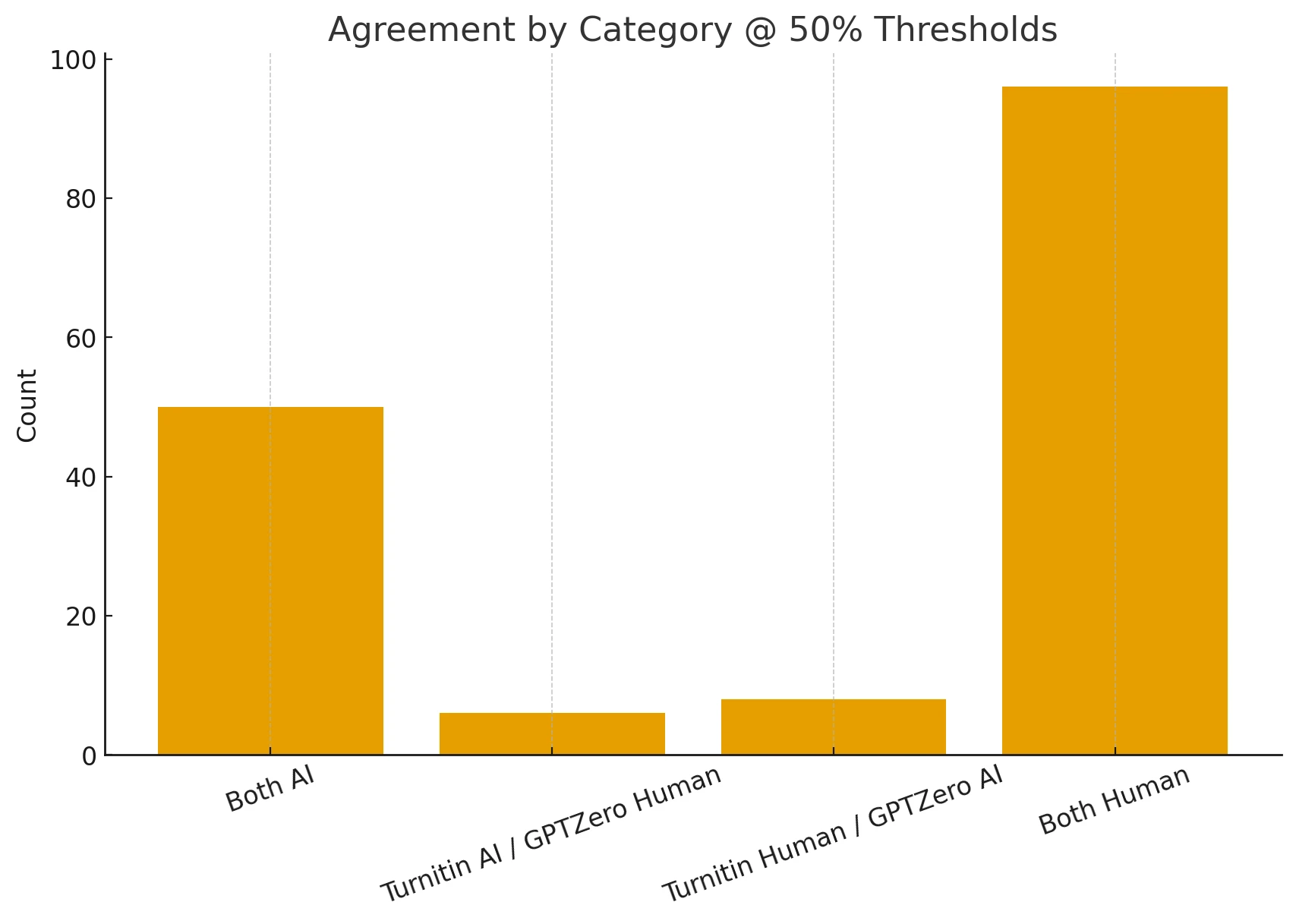

Now for an interesting part: we used a very simple threshold of 50%. That means if Turnitin AI Score ≥ 50, we say “AI,” else “Human.” And if GPTZero AI Score ≥ 50, we say “AI,” else “Human.” Compare these calls across 160 passages, and you get:

- Both labeled AI: 50 passages

- Both labeled Human: 96 passages

- Turnitin labeled AI but GPTZero labeled Human: 6 passages

- Turnitin labeled Human but GPTZero labeled AI: 8 passages

So that’s a total of 160. The overall agreement (i.e., they matched) is 146 out of 160, which is 91.3%. We also computed something known as “Cohen’s κ” (kappa), which basically tells us how much better these two tools agree compared to random chance. It came out to 0.809, which is considered strong agreement.
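The agreement rate and κ can be re-derived from the four counts listed above. This is a small sketch using the standard two-rater formula, κ = (observed agreement – chance agreement) / (1 – chance agreement); the variable names are ours.

```python
# Counts from the four-way breakdown above (threshold of 50 on both tools).
both_ai = 50
both_human = 96
turnitin_ai_only = 6   # Turnitin said AI, GPTZero said Human
gptzero_ai_only = 8    # Turnitin said Human, GPTZero said AI

total = both_ai + both_human + turnitin_ai_only + gptzero_ai_only

# Observed agreement: share of passages where the two labels match.
p_observed = (both_ai + both_human) / total

# How often each tool says "AI" overall.
turnitin_ai_rate = (both_ai + turnitin_ai_only) / total
gptzero_ai_rate = (both_ai + gptzero_ai_only) / total

# Agreement expected if the tools labeled independently at those rates.
p_chance = (turnitin_ai_rate * gptzero_ai_rate
            + (1 - turnitin_ai_rate) * (1 - gptzero_ai_rate))

kappa = (p_observed - p_chance) / (1 - p_chance)

print(f"total={total}, agreement={p_observed:.4f}, chance={p_chance:.3f}, kappa={kappa:.3f}")
# total=160, agreement=0.9125, chance=0.541, kappa=0.809
```

Two tools that label independently at these rates would still agree about 54% of the time, which is why κ (0.809) is a more demanding number than the raw 91.3%.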

What About the Charts?

I personally looked at two scatter plots: (1) Turnitin AI Score vs GPTZero AI Score, and (2) Turnitin AI Score vs GPTZero Human Score. The first scatter plot showed a tight upward trend, and the second showed a clear downward trend. We also made a bar chart that groups “Both Human,” “Both AI,” “Turnitin=AI & GPTZero=Human,” and “Turnitin=Human & GPTZero=AI.” The “Both Human” and “Both AI” bars tower high, while the mismatch bars are quite small.

We also created two box plots, one grouping by Turnitin’s calls and the other grouping by GPTZero’s calls. You see a neat separation in the scores with very few outliers. That’s exactly what you’d expect if both are seeing the same “signals” for AI.

Interpretation

So, with 91.3% agreement, the two detectors basically “see” the same AI footprints in the text. But they do disagree on 14 passages, usually around the borderline zone (like near 45–55). The Cohen’s κ of 0.809 suggests their agreement is not a fluke: they are not matching simply because both label nearly everything AI or nearly everything Human. So yes, they do behave similarly in real usage.

My Personal Experience

When I tried both tools on an essay that I wrote entirely by myself, Turnitin gave me an AI Score of 10% while GPTZero labeled it around 12% AI. So, they both indicated I was mostly human, which was correct. In another attempt with a chunk of GPT-5 (ChatGPT version) text, both gave me very high percentages, above 80%. So that’s consistent enough for me to say they are not drastically different.

Practical Takeaways

- Use These Tools as a First Pass, Not as Absolute Truth

Detectors can help you quickly see if something “might” be AI-generated, but they are never 100% accurate.

- Choose a Threshold Carefully

If you set ≥50% as “AI,” you might still catch borderline false alarms. Some teachers might use stricter cut-offs, like 80%, to reduce false positives.

- Always Do a Follow-Up

Examine document properties or writing style. Request drafts from the writer. Don’t rely on an AI score alone.

- Consider Confidence Zones

You might do: ≤20% as “Likely Human,” 20–80% as “Uncertain,” and ≥80% as “Likely AI.”
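The three-zone idea from the last takeaway can be sketched as a small helper. The function name `confidence_zone` and its default cut-offs are illustrative choices, not settings from Turnitin or GPTZero; adjust `low` and `high` to your own tolerance for false positives.

```python
def confidence_zone(ai_score: float, low: float = 20, high: float = 80) -> str:
    """Map a 0-100 AI score to one of three zones instead of a hard AI/Human call."""
    if not 0 <= ai_score <= 100:
        raise ValueError("AI score must be between 0 and 100")
    if ai_score <= low:
        return "Likely Human"
    if ai_score >= high:
        return "Likely AI"
    return "Uncertain"


# Hypothetical scores, including the borderline range where the tools tend to disagree.
for score in (10, 48, 52, 85):
    print(score, confidence_zone(score))
# 10 Likely Human
# 48 Uncertain
# 52 Uncertain
# 85 Likely AI
```

With a single 50% cut-off, 48 and 52 would land on opposite sides; with zones, both are flagged for a closer human look.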

Limitations

There is no ground-truth label telling us which text is absolutely AI or absolutely human. We only see that they often produce similar judgments. Our dataset of 160 passages has a certain style, length, etc., so results might differ with other samples. Also, the 50% threshold is arbitrary—someone else seeing too many false positives might set it to 70%, or 90%, or even 95%.

FAQ Section

Q1. Does Turnitin’s AI detection work exactly like GPTZero?

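Their scores behave very similarly. Our correlation analysis shows a strong alignment (Pearson ≈ 0.856) between the two AI scores. That tells us the outputs line up closely; it does not tell us the two tools work the same way under the hood.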

Q2. Which is better?

Honestly, they behave very similarly, and without ground-truth labels our data cannot say which one is more accurate. If Turnitin flags it, GPTZero likely flags it too. They may differ in borderline situations.

Q3. Does a high Pearson correlation (0.856) mean they are always right?

No, it just means when Turnitin ranks a passage as high AI, GPTZero does too (and vice versa). It doesn’t guarantee that their judgments are always correct in an absolute sense.

Q4. What is this Cohen’s κ of 0.809?

Cohen’s κ is a statistical measure that checks how much two raters (in this case, Turnitin and GPTZero) agree above and beyond pure chance. A value above 0.75 is generally considered strong.

Q5. Can I rely on these detectors for serious academic or professional judgments?

It’s better to treat them as a triage tool. Always double-check with writing drafts, style consistency, or direct interviews with the writer.

The Bottom Line

Turnitin’s AI detector and GPTZero are indeed similar—our correlation (≈0.86) and agreement rate (≈91.3%) confirm it. They see the same footprints in text, but they can still be wrong, especially around the middle range. So handle them with care, use them as a helpful guide, and always pair them with human judgement.

![[HOT TAKE] Is Turnitin AI detector similar to GPTZero?](/static/images/is-turnitin-ai-detector-similar-to-gptzeropng.webp)