The short answer is NO. The longer one? Well, the devil hides in plain sight, so keep scrolling if you want the gory details.

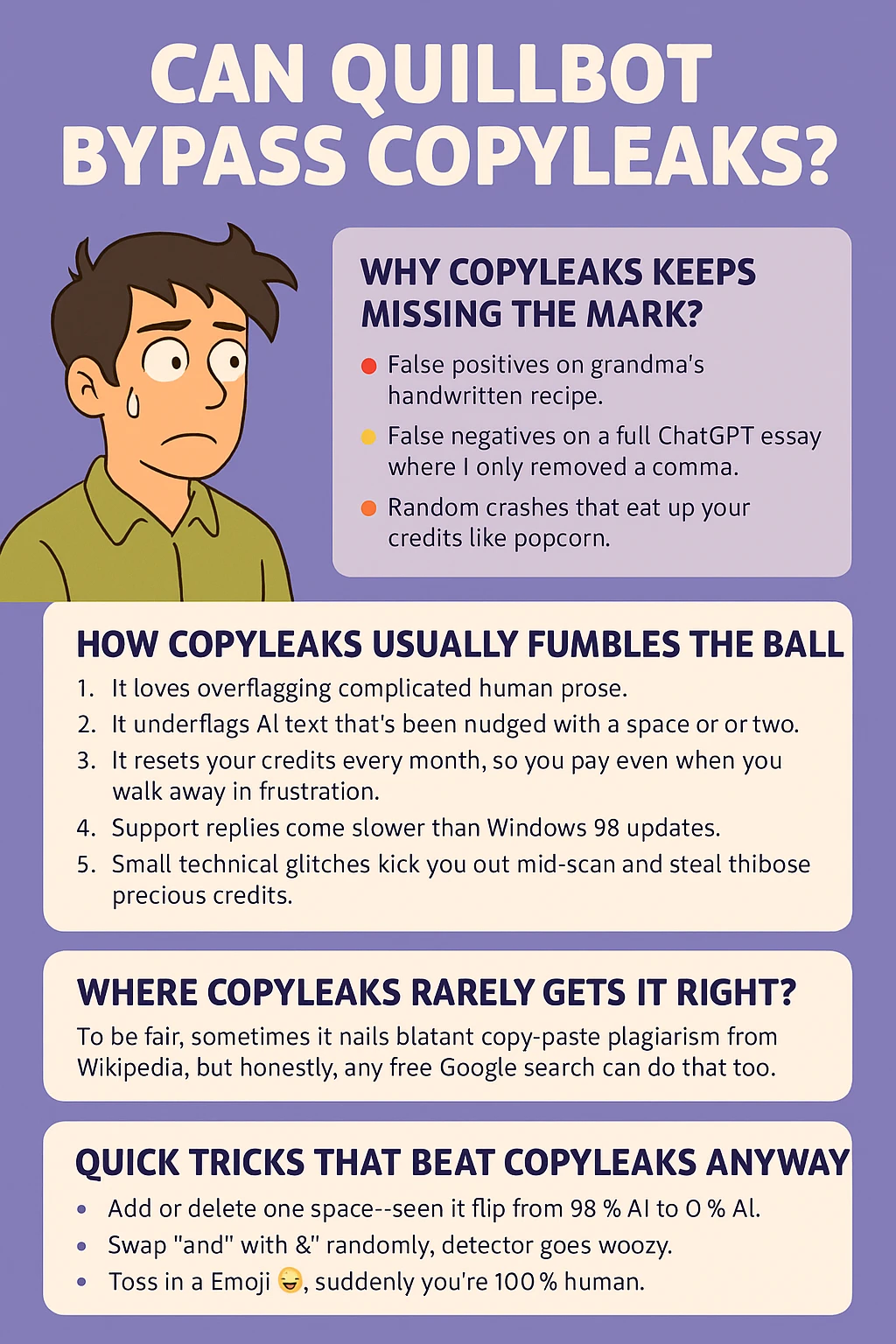

Why does Copyleaks keep missing the mark?

Just like Quillbot isn’t made to dodge AI detectors, Copyleaks isn’t really built to be consistently right. They brag about 99% precision on their website, but my tests (and many Reddit rants) tell a very different story. When you actually run real‑world text through it, you get all kinds of weird readings:

- False positives on grandma’s handwritten recipe.

- False negatives on a full ChatGPT essay where I only removed a comma.

- Random crashes that eat up your credits like popcorn.

While building Deceptioner, I had to benchmark loads of detectors, Copyleaks included. I fed it three batches of content: raw ChatGPT output, lightly paraphrased text, and purely human writing. Guess what? The detector mis‑graded all three sets at least once. That inconsistency convinced me of one thing: if your text must pass through a detector at all, you might as well face one that is easy to bypass, and Copyleaks gladly obliges.

How does Copyleaks usually fumble the ball?

1. It loves overflagging complicated human prose.

2. It underflags AI text that’s been nudged with a space or two.

3. It resets your credits every month, so you pay even when you walk away in frustration.

4. Support replies come slower than Windows 98 updates.

5. Small technical glitches kick you out mid‑scan and steal those precious credits.

Where does Copyleaks (rarely) get it right?

To be fair, sometimes it nails blatant copy‑paste plagiarism from Wikipedia, but honestly, any free Google search can do that too.

What did the numbers show?

Below is a quick snapshot from one of my weekend torture‑tests, where I tried to confuse Copyleaks in five different ways:

| Scenario | Copyleaks Output | What Really Happened |
|---|---|---|
| ChatGPT‑4 article copy‑pasted | 37% AI, rest human | 100% AI (missed) |
| Human essay with quirky punctuation | 84% AI | 0% AI (false positive) |
| Paragraph paraphrased in Quillbot | 0% AI | Still AI‑generated |
| Code snippet taken from GitHub | No plagiarism | Identical to repo |
| Full academic paper (open access) | 97% plagiarism | Self‑citation allowed, not plagiarism |

Cost vs. credibility

Paying $16.99 a month for 100 credits that vanish if you blink? It hurts twice as much when half of those scans serve you garbage results. And if you hit the refund link, prepare for a ticket that collects digital dust for a week.

Quick tricks that beat Copyleaks anyway

• Add or delete one space: I’ve seen it flip from 98% AI to 0% AI.

• Swap “and” for “&” at random, and the detector goes woozy.

• Toss in an emoji 😊 and suddenly you’re 100% human.

If a detector can be outsmarted by punctuation acrobatics, how accurate can it really be?

Frequently Asked Questions

Q1. Does Copyleaks ever get things right?

Sure. A broken clock is right twice a day; Copyleaks, maybe three times if you’re lucky.

Q2. Is Copyleaks worth the money?

Not unless you enjoy spending on false alarms and vanished credits.

Q3. Can Copyleaks identify ChatGPT?

Only if you don’t sneeze on the text afterward. Even a single deleted apostrophe can fool it.

Q4. Are there better alternatives?

Turnitin, GPTZero, and Originality.AI all have issues, but at least they pretend harder. Or just use an AI humanizer like Deceptioner and skip the headache.

Q5. Does Copyleaks support code plagiarism?

It claims to, but during my test a copy‑pasted GitHub snippet sailed right through.

The Bottom Line

Copyleaks is loud on marketing but quiet on reliability. If your grade, job, or sanity hinges on an accurate detector, Copyleaks ain’t the horse you want to bet on.