AI detection in research papers is one of those topics that gets everyone buzzing. So, do AI detectors actually work on research papers? The short answer: it's a mixed bag. The longer answer is that while these detectors are meant to help maintain academic integrity, the devil really is in the details. Keep reading to learn more.

Why AI Detection Is a Double-Edged Sword

The simple truth is that many research papers nowadays get a little extra AI assistance, mostly through free tools like ChatGPT rather than the pricier subscription tiers. But here’s the catch: most educators and students rely on these free versions, and many AI detectors aren’t really built to account for such everyday usage. Most were developed after ChatGPT’s debut in November 2022, primarily to stop students from cheating. They work by looking for textual “tells” such as:

- Lack of variation in sentence structure

- Overuse of certain words and conjunctions

- Predictable sentence patterns and paragraph lengths

In other words, if your research paper has that same uniform, robotic feel - even if it’s genuinely your own work - it may well get flagged. And that, my friends, is a huge problem.
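To make those tells a little more concrete, here is a minimal Python sketch that turns each list item into a number. The function names and metric choices (coefficient of variation for sentence and paragraph lengths, share of the single most frequent word) are our own assumptions for illustration; commercial detectors do not publish their exact features, and this is not how any particular tool works.

```python
import re
import statistics
from collections import Counter


def split_sentences(text: str) -> list[str]:
    # Naive splitter: break after ".", "!" or "?" followed by whitespace.
    return [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]


def coefficient_of_variation(values: list[int]) -> float:
    # Standard deviation relative to the mean; 0.0 means perfectly uniform values.
    return statistics.pstdev(values) / statistics.mean(values)


def stylometric_tells(text: str) -> dict[str, float]:
    """Rough numeric stand-ins for the three tells (assumes non-empty text)."""
    # 1. Variation in sentence structure, approximated by sentence-length variation.
    sentence_lengths = [len(s.split()) for s in split_sentences(text)]
    # 2. Word overuse: share of all words taken by the single most frequent word.
    words = re.findall(r"[a-z']+", text.lower())
    top_word_share = Counter(words).most_common(1)[0][1] / len(words)
    # 3. Paragraph-length predictability (paragraphs separated by blank lines).
    paragraph_lengths = [len(p.split()) for p in text.split("\n\n") if p.strip()]
    return {
        "sentence_length_cv": coefficient_of_variation(sentence_lengths),
        "top_word_share": top_word_share,
        "paragraph_length_cv": coefficient_of_variation(paragraph_lengths),
    }


uniform = "The model is good. The data is clean. The test is fair. The code is fast."
varied = (
    "The model is good. Honestly, after weeks of wrangling messy survey exports, "
    "the data finally looks clean. Tests pass. The code, once we profiled it "
    "properly, turned out to be fast enough for our needs."
)
print(stylometric_tells(uniform))
print(stylometric_tells(varied))
```

Every sentence in the uniform sample has four words, so its sentence-length variation is exactly zero, which is the kind of flatness a pattern-based detector is looking for. Plenty of careful human writers produce similarly even prose, which is exactly why such signals misfire.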

Different AI Detectors and Their Approaches

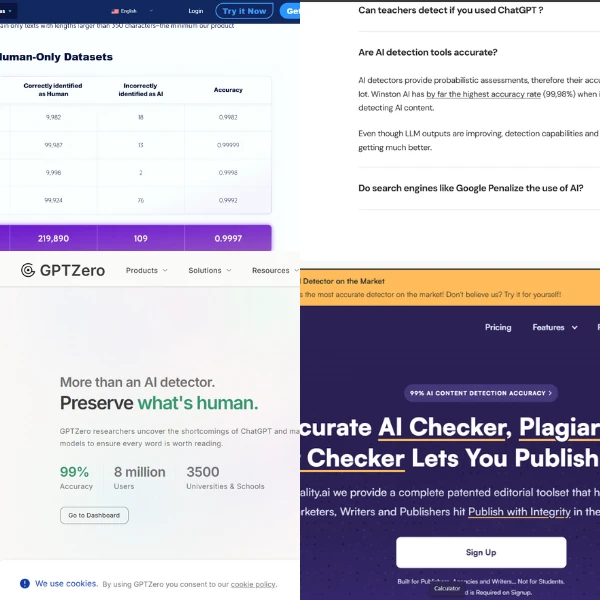

Now, let’s talk about the different AI detectors out there and how they work. There isn’t just one tool on the market; there’s a whole arsenal, including Turnitin’s AI detection, GPTZero, Originality.ai, Copyleaks, and Winston AI. Here’s a quick rundown:

Turnitin’s AI Detector: Originally known for plagiarism checking, Turnitin has integrated AI detection features that analyze sentence structures, keywords, and overall text patterns. It’s widely used in academic settings but sometimes flags genuine human work due to its reliance on pattern matching.

GPTZero: Designed specifically to catch AI-generated text, GPTZero comes with accuracy claims of around 99% (as touted by some), yet recent studies show that its accuracy can drop dramatically when minor adversarial tweaks are made.

Originality.ai: Marketing itself with accuracy figures of around 99%, Originality.ai uses metrics like burstiness and perplexity to detect AI text. Still, it isn’t foolproof: small edits can sometimes throw it off.
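For readers wondering what “perplexity” and “burstiness” actually measure, here is a toy Python sketch. It is not Originality.ai’s implementation: it assumes a tiny add-one-smoothed bigram model in place of the large neural language models detectors presumably rely on, and it treats burstiness as the spread of per-sentence perplexity, which is only one informal definition. All class and function names are hypothetical.

```python
import math
import re
import statistics
from collections import Counter


def tokenize(text: str) -> list[str]:
    return re.findall(r"[a-z']+", text.lower())


class BigramModel:
    """Toy add-one-smoothed bigram model, standing in for a real neural LM."""

    def __init__(self, corpus: str) -> None:
        tokens = ["<s>"] + tokenize(corpus)
        self.bigrams = Counter(zip(tokens, tokens[1:]))
        # How often each token appears as the first half of a bigram.
        self.contexts = Counter(tokens[:-1])
        # Vocabulary size, plus one slot for unseen words.
        self.vocab_size = len(set(tokens)) + 1

    def prob(self, prev: str, word: str) -> float:
        # Laplace smoothing: (count(prev, word) + 1) / (count(prev) + V).
        return (self.bigrams[(prev, word)] + 1) / (self.contexts[prev] + self.vocab_size)

    def perplexity(self, text: str) -> float:
        # exp of the average negative log-probability per token (assumes non-empty text).
        # Lower perplexity means the text is more predictable to this model.
        tokens = ["<s>"] + tokenize(text)
        pairs = list(zip(tokens, tokens[1:]))
        log_prob = sum(math.log(self.prob(p, w)) for p, w in pairs)
        return math.exp(-log_prob / len(pairs))


def burstiness(model: BigramModel, text: str) -> float:
    """Population std. dev. of per-sentence perplexity (one informal definition)."""
    sentences = [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]
    return statistics.pstdev([model.perplexity(s) for s in sentences])


reference = "the results show that the model performs well on the test set. the model performs well."
model = BigramModel(reference)
print(model.perplexity("the model performs well on the test set."))
print(model.perplexity("purple giraffes negotiate quietly beneath frozen lighthouses."))
```

Text that closely echoes what the model has seen scores a lower perplexity than surprising text. The detector intuition is that AI output tends to be uniformly low-perplexity (low burstiness), while human writing swings between predictable and surprising sentences. Small edits shift these numbers, which is why they can throw a detector off.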

Copyleaks: Claiming accuracy upwards of 99.97%, Copyleaks also relies on a large database for comparison. But that figure might not reflect reality, especially for texts from non-native English speakers or text that has been lightly or awkwardly rephrased.

Winston AI: Winston AI claims even higher accuracy, around 99.98%. In practice, however, as AI models evolve, techniques to mask AI usage (like altering sentence structures or mixing outputs from different models) turn detection into a never-ending cat-and-mouse game.

Despite these impressive-sounding numbers, studies show that detection accuracy for unmodified AI output can be as low as 39.5%, dropping to roughly 22.3% when adversarial techniques are applied. And don’t get me started on false positives: a far-from-trivial 15% of human-written content can be flagged as AI-generated. So, while these detectors provide a baseline, they’re hardly the be-all and end-all of academic verification.
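To see what rates like these mean for an actual class, the sketch below computes a detection rate and a false positive rate from outcome counts, then estimates how many honest papers get flagged. The counts are invented purely so the rates land near the figures quoted above (roughly 40% detection and a 15% false positive rate); they are not data from any study, and the class and function names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class DetectorEvaluation:
    """Outcome counts from testing a detector on texts of known origin."""

    ai_flagged: int     # AI-written texts correctly flagged (true positives)
    ai_missed: int      # AI-written texts the tool let through (false negatives)
    human_passed: int   # human-written texts correctly passed (true negatives)
    human_flagged: int  # human-written texts wrongly flagged (false positives)

    def detection_rate(self) -> float:
        # Share of AI-written texts that the tool actually catches.
        return self.ai_flagged / (self.ai_flagged + self.ai_missed)

    def false_positive_rate(self) -> float:
        # Share of human-written texts wrongly labelled as AI-generated.
        return self.human_flagged / (self.human_flagged + self.human_passed)


def expected_false_flags(honest_papers: int, false_positive_rate: float) -> int:
    # How many genuinely human-written papers we would expect to be flagged.
    return round(honest_papers * false_positive_rate)


# Invented counts, chosen only to illustrate the arithmetic.
evaluation = DetectorEvaluation(ai_flagged=79, ai_missed=121, human_passed=85, human_flagged=15)
print(f"Detection rate: {evaluation.detection_rate():.1%}")            # 39.5%
print(f"False positive rate: {evaluation.false_positive_rate():.1%}")  # 15.0%
print(expected_false_flags(200, evaluation.false_positive_rate()))     # 30
```

Put plainly: at a 15% false positive rate, a course with 200 entirely honest submissions could still expect around 30 students to be flagged.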

The Rapid Evolution of AI vs. Detection Tools

AI models like OpenAI’s o1, o3-mini, and o1-pro, Anthropic’s Claude 3.5 Sonnet, and Google’s Gemini 2.5 Pro can now generate highly nuanced, human-like text. This evolution has turned the whole game into an arms race.

On one hand, we have AI generators backed by massive resources from OpenAI, Microsoft, and Google; on the other, the detection tools are still largely dependent on static patterns and basic statistical comparisons.

This gap means that even a slight tweak - a change in sentence length, a carefully introduced error, or a mix of outputs from different models - can be enough to slip past these detectors.
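Here is a deliberately simplistic illustration of why such tweaks work. The `toy_detector` below is hypothetical and far cruder than any commercial tool: it flags text whose sentence lengths are too uniform, using an arbitrarily chosen threshold of 0.25. Merging two sentences and trimming another, without changing the findings, is enough to flip its verdict.

```python
import re
import statistics


def sentence_length_cv(text: str) -> float:
    """Coefficient of variation (std. dev. / mean) of sentence lengths in words."""
    sentences = [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]
    lengths = [len(s.split()) for s in sentences]
    return statistics.pstdev(lengths) / statistics.mean(lengths)


def toy_detector(text: str, threshold: float = 0.25) -> bool:
    """Hypothetical rule: flag text whose sentence lengths are suspiciously uniform."""
    return sentence_length_cv(text) < threshold


original = (
    "The study examines sleep and memory. Participants completed two recall tasks. "
    "Results indicate a modest positive effect. Further work should test larger samples."
)
# Same findings, lightly edited: two sentences merged, one cut short.
tweaked = (
    "The study examines sleep and memory. Participants completed two recall tasks, "
    "and the results indicate a modest positive effect. Bigger samples next."
)
print(toy_detector(original))  # True  (flagged)
print(toy_detector(tweaked))   # False (passes)
```

Real detectors combine many more signals, but the underlying weakness is similar: any statistic computed from surface features moves when the surface changes.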

Equity Issues & Unintended Consequences

One of the most alarming outcomes is the inequitable impact these tools can have on students. Imagine four students who all use generative AI, but in different ways: one uses free versions because of limited access, a non-native English speaker relies on ChatGPT for translation, another from a low socio-economic background uses Meta’s Llama models, and a privileged student has access to advanced paid models.

Research shows that the detection tools frequently flag the less-resourced students with AI scores as high as 90% or 100%, whereas the privileged student might score only around 20%, even though all four intentionally used AI. This not only creates unfair academic consequences but also increases the workload on educators, who must then review these flags through stressful appeals and discussions.

Ethical and Privacy Concerns

There’s also a serious conversation to be had about ethics and privacy. Submitting a research paper to an AI detector may conflict with data privacy regulations, a concern especially relevant for institutions like UNBC, where student work is subject to strict privacy guidelines. Moreover, over-reliance on these tools can erode trust between students and instructors, shifting the focus from genuine academic inquiry to a blame game over digital fingerprints.

Frequently Asked Questions

Q1. How reliable are AI detectors for research papers?

A: Not very. With detection accuracy hovering around 39.5% (dropping further with minor tweaks) and false positive rates near 15%, it’s clear that these tools are only a rough guide.

Q2. Should educators solely rely on AI detection in evaluating research papers?

A: No. Relying only on these tools can lead to mistrust and additional workload. They should be used as a supplementary check, alongside traditional, context-sensitive evaluation methods.

Q3. Do AI detectors disproportionately target certain groups?

A: Yes, evidence points to non-native English speakers and students with less access to advanced AI tools being flagged more often than their privileged counterparts.

Q4. Is it permissible to use AI detection for research paper evaluation?

A: Technically, yes: many institutions use these tools to uphold academic integrity. However, given their limitations, they should never be the sole arbiter of a paper’s originality.

The Bottom Line

While AI detectors - like Turnitin’s built-in checker, GPTZero, and the rest - are marketed as foolproof guards against AI-generated content, they’re riddled with issues. They create inequitable academic environments, add undue burden on educators, and sometimes flag genuine work as AI-produced. Relying solely on AI detection is like trying to catch a moving target with a net full of holes.

The best approach is to use these tools as just one part of a broader evaluation strategy. Educators and institutions should focus on fostering ethical, transparent academic practices, boosting AI literacy among both students and teachers, and rethinking assessment methods so they value genuine understanding over mere pattern recognition.

At the end of the day, while different AI detectors offer varied approaches and promising accuracy numbers, no tool is perfect. Use them cautiously, support detection with human judgment, and remember that real academic work is too nuanced to be fully captured by any algorithm.