As we all know, text from ChatGPT or Gemini gets detected by Turnitin pretty easily. But is it the same for Claude AI? The short answer is YES. You don't need to read any further than this, but if you want the details, nobody is stopping you.

Why does Claude AI get detected by Turnitin?

The simple answer is that, just like ChatGPT, Claude AI is not made to bypass AI detectors. You can see this from Anthropic's documentation and marketing materials: they never promote beating Turnitin as one of Claude's features. A tool that is not built for this task is unlikely to accomplish it reliably.

In fact, if you dig deeper into its history, you will find that Anthropic (the company behind Claude AI) was formed by ex-OpenAI employees who disagreed with OpenAI's stance on AI safety. Given this history, I don't think Anthropic will lean towards making text generated by Claude undetectable.

If anything, they would probably prefer to make their model AI-detector friendly, even if that makes the tool less attractive to some users. Their mission statement revolves around AI safety, and they are unlikely to compromise on it.

Claude is known for delivering coherent, human-like text. But human-like is not necessarily “undetectable.” Turnitin has sophisticated AI detection algorithms. Whether you feed your essay into Claude in small bites or big chunks, Turnitin can compare the style and structure of the result with its known AI text patterns and look for matches.

Claude AI is also widely popular, which means Turnitin’s developers keep an eye out for typical text patterns it produces. So, even though Claude might produce text that looks less robotic, often the sentence formations or transitions are still recognized as AI patterns by Turnitin.

New Data: Turnitin’s Performance on Claude Versions

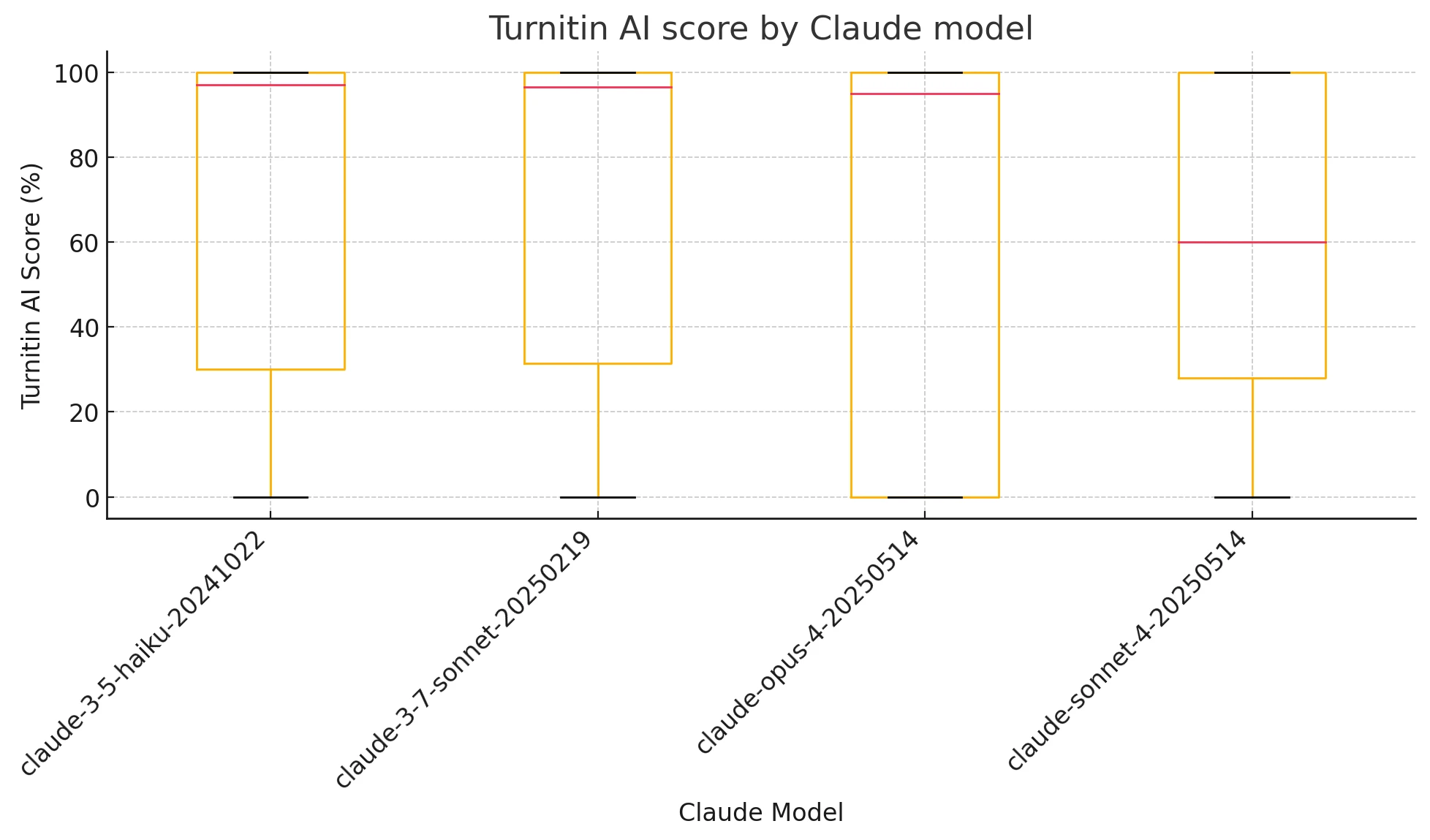

Recent tests show how Turnitin scores multiple generations from different Claude models. The results indicate that Turnitin's AI detection is still quite volatile and can miss a significant portion of Claude outputs when using the default 90% threshold. Here's a summary:

| Claude model | # texts | Mean score (%) | Std. dev. (%) | Flagged at ≥ 90 % (% of texts) |
|---|---|---|---|---|
| claude-3-5-haiku-20241022 | 34 | 65.3 | 40.9 | 52.9 |
| claude-3-7-sonnet-20250219 | 20 | 68.4 | 43.3 | 60.0 |
| claude-opus-4-20250514 | 21 | 65.2 | 44.2 | 57.1 |
| claude-sonnet-4-20250514 | 25 | 58.8 | 39.8 | 44.0 |

What the numbers and graphs tell us

- Wide score spread – Each Claude piece can receive anywhere from 0 % to 100 %.

- Median scores sit well below the 90 % flagging threshold. Box-plot medians range from roughly 50 % to 70 % depending on the model, hovering around 60 %.

- With the commonly cited 90 % cut-off, Turnitin catches only about half (∼53 %) of Claude texts.

- Lowering the threshold to 70 % pushes recall to ≈73 %, but still leaves > 1/4 undetected.

- Model-to-model differences are modest – No Claude version is consistently “invisible” or “obvious.”
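The "about half" figure can be checked by pooling the four table rows. The snippet below is only a small sketch of that arithmetic: it assumes each rounded percentage in the table corresponds to a whole number of flagged texts, and the function name `pooled_flag_rate` is made up for this illustration.

```python
# Rows copied from the table above: (model, number of texts, % flagged at >= 90).
rows = [
    ("claude-3-5-haiku-20241022", 34, 52.9),
    ("claude-3-7-sonnet-20250219", 20, 60.0),
    ("claude-opus-4-20250514", 21, 57.1),
    ("claude-sonnet-4-20250514", 25, 44.0),
]


def pooled_flag_rate(rows):
    """Return (flagged_count, total_count, pooled_rate_percent)."""
    # Assumption: each rounded percentage maps back to a whole number of texts.
    flagged = sum(round(n * pct / 100) for _, n, pct in rows)
    total = sum(n for _, n, _ in rows)
    return flagged, total, 100 * flagged / total


flagged, total, rate = pooled_flag_rate(rows)
# Prints: 53/100 texts flagged -> recall 53%, miss rate 47%
print(f"{flagged}/{total} texts flagged -> recall {rate:.0f}%, miss rate {100 - rate:.0f}%")
```

Weighting by the number of texts matters here because the models were not sampled equally (20 to 34 texts each), so a plain average of the four percentages would give a slightly different figure.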

Reliability verdict

Turnitin’s detector is not dependable as a yes/no gate for Claude-generated prose:

- High false-negative rate – about half of genuine AI texts slip through at the default 90 % cut-off.

- Score volatility – identical-quality generations can score 0 % or 100 %, which undermines repeatability.

- No view on false-positives – our sample lacks human writing, but prior peer-reviewed studies show non-trivial mis-labeling of real student work.

Recommendation: Treat Turnitin’s AI score as a weak heuristic, useful only when combined with human review and other metrics (writing style shifts, process evidence, revision history, etc.). Relying on the score alone risks both missed detections and unjust accusations.

My personal experience

In my personal experience, Turnitin does catch a noticeable amount of Claude AI’s raw output, but the data above indicates it also misses close to half of it at the 90% threshold. That doesn't mean you can't get past it with some AI humanization prompts or a dedicated AI bypasser like Deceptioner. The point is that if you submit Claude's text as-is, Turnitin may well flag it, especially if it falls into the style patterns the detector recognizes.

Earlier in 2023, it might have been easier to slip past Turnitin with Claude AI’s raw output. But since Turnitin's last AI detector update in December 2023, it has become more challenging. The data clearly illustrates that detection rates are inconsistent; sometimes Turnitin flags a large portion, and sometimes it doesn't. Ultimately, any method of bypassing depends heavily on the constantly evolving nature of Turnitin’s AI detection.

Which tool is made to do this task?

There are many rewriters or AI text humanizers that claim to bypass AI detection. You could have a look at Undetectable.ai, Stealthwriter, Deceptioner, etc. The thing that differentiates these tools from the likes of Claude or ChatGPT is that they are purpose-built to add randomization or subtle language shifts specifically to outsmart AI detectors like Turnitin.

However, none of them can guarantee 100% success every time. Turnitin’s AI detection keeps getting updated, so it’s basically a cat-and-mouse game. You might get away with it once or twice, but the results are not consistent. If you’re writing an important assignment, you need to be aware of the risks.

Detection Techniques Used by Turnitin

| Detection Technique | Description | Impact on Claude AI's Output |
|---|---|---|
| Linguistic Analysis | Evaluates text for nuanced and creative phrasing, vocabulary diversity, and natural style. AI-generated text often appears more generic, with overused words and consistent structures. | Even though Claude AI produces human-like text, its output can still lack the depth and variability of true human writing, making it more likely to be flagged. |
| Probability Models | Uses machine learning and natural language processing to break text into segments and statistically model the likelihood of AI generation through metrics like burstiness and perplexity. | Claude's text, due to its systematic patterns and consistency, is statistically distinguishable from naturally erratic and varied human writing. |
| Database Comparison | Compares submitted work against a vast repository of known AI outputs and academic texts to identify similarities in phrasing, structure, and content. | Given Claude AI’s reliance on common training data, its output may share recognizable patterns with other AI-generated content in the database, increasing detection probability. |
| Content Originality Checks | Cross-references text against existing sources. Because AI tools assemble content from pre-existing information, they often inadvertently match previously published texts. | Content generated by Claude AI may exhibit overlaps or unoriginal segments that Turnitin can cross-check, thereby lowering its originality score. |

Why is it easy for Turnitin to detect Claude AI?

Turnitin uses advanced machine learning algorithms to detect text that has suspiciously consistent structure or very low burstiness and perplexity. Claude AI, being an LLM, tends to generate text with hallmark patterns, no significant grammar mistakes, and a certain style that is less dynamic than real human writing.

One huge giveaway is that LLMs often avoid overly complex or ambiguous language; they tend to answer with confident, well-structured sentences. Turnitin likely zeroes in on these textual patterns, and it becomes easy for them to figure out that your content is not entirely human-generated.
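To make burstiness and perplexity a little more concrete, here is a toy sketch in Python. It is not Turnitin's method, which is not public. As stand-ins, it assumes burstiness can be approximated by the variation in sentence length, and perplexity by a simple add-one-smoothed unigram word model built from a reference text. Real detectors would most likely use neural language models instead. The function names and sample texts are invented for illustration.

```python
import math
import re
from collections import Counter


def sentence_lengths(text):
    """Split on ., ! or ? and return the word count of each non-empty sentence."""
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    return [len(s.split()) for s in sentences]


def burstiness(text):
    """Coefficient of variation of sentence length (std / mean); higher = more varied."""
    lengths = sentence_lengths(text)
    if len(lengths) < 2:
        return 0.0
    mean = sum(lengths) / len(lengths)
    variance = sum((n - mean) ** 2 for n in lengths) / len(lengths)
    return math.sqrt(variance) / mean


def unigram_perplexity(text, reference):
    """Perplexity of `text` under an add-one-smoothed unigram model of `reference`."""
    ref_words = reference.lower().split()
    words = text.lower().split()
    if not words:
        raise ValueError("text must contain at least one word")
    counts = Counter(ref_words)
    vocab = len(set(ref_words) | set(words))
    total = len(ref_words)
    log_prob = sum(math.log((counts[w] + 1) / (total + vocab)) for w in words)
    return math.exp(-log_prob / len(words))


# Hypothetical sample texts, chosen only to show the direction of each metric.
uniform = "The cat sat down. The dog ran off. The bird flew away."
varied = "Stop. The storm that had been building all afternoon finally broke over the valley. We ran."
reference = "the cat sat on the mat the dog sat on the rug"

print("burstiness (uniform):", burstiness(uniform))
print("burstiness (varied):", burstiness(varied))
print("perplexity (predictable):", unigram_perplexity("the cat sat on the rug", reference))
print("perplexity (surprising):", unigram_perplexity("quantum zebras juggle violet equations", reference))
```

In this toy setup, evenly sized sentences give a burstiness of zero, and wording the reference model has already seen gives a lower perplexity. That is the "too consistent, too predictable" signal described above, in miniature.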

Frequently Asked Questions

Q1. Does Claude AI show up in Turnitin?

Yes, often. In the tests above, Turnitin flagged roughly half of Claude AI’s raw outputs at the 90% threshold. You can pass the plagiarism check sometimes, but you should not count on unmodified text passing the AI detection check.

Q2. Is using Claude AI plagiarism?

No, the mere act of using Claude AI to write is not plagiarism if you are synthesizing your own ideas. However, Turnitin may still flag your text as AI-generated, and factual content without references can count against you, so paraphrase carefully and cite your sources.

Q3. Can you bypass Turnitin with Claude AI if you reword it?

If you rely on basic paraphrasing or minimal rewriting, Turnitin will likely flag it. You can use specialized AI text humanizers like Deceptioner, but it’s still not foolproof.

Q4. Is Claude AI free to use?

Claude AI has free options but also offers more advanced features with a paid plan. The free plan might not be enough for large projects, especially if you’re rewriting huge chunks of text.

Q5. Could Claude AI go undetected by Turnitin in the future?

Turnitin’s developers are always updating their detectors, so it’s a moving target. If LLMs evolve to produce text that is more erratic and less “perfect,” maybe it could fool Turnitin for a while. But that is also just speculation at this point.

The Bottom Line

Claude AI is a fantastic tool for getting new ideas, clarifying concepts, or drafting an outline, but it is not reliable at all when it comes to bypassing Turnitin’s AI detection. If you want to stay safe, you either need to do serious manual rewriting (and cite your sources!), or you should consider using specialized rewriters that are dedicated to bypassing AI detectors. Just be aware that it’s an ongoing race - there is no absolute guarantee.