Originality.ai claims to detect AI-generated text, but can it detect GPT-5 reliably? On our small test set, the answer is no: it missed more than half of the GPT-5 samples at the thresholds most teams use. The details below explain why.

Why does Originality.ai struggle with GPT-5?

The simple answer is that it doesn’t catch enough GPT-5 text at the thresholds most people use. In our tests, Originality.ai flagged only about 39% of GPT-5 samples at a 0.90 cutoff (an AI-score of 90% or higher). Lowering the cutoff to 0.50 raised that to only about 43%. So if you expect Originality.ai to catch all GPT-5 text, it won’t: more than half of GPT-5 content slips through unnoticed at thresholds most teams consider “safe.”
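To make "flagged at a cutoff" concrete, here is a minimal sketch of how such a detection rate can be computed. The function name `detection_rate` and the sample scores are illustrative assumptions, not our actual pipeline or data; scores are taken on a 0 to 1 scale so that 0.90 corresponds to an AI-score of 90%.

```python
def detection_rate(scores, threshold):
    """Fraction of AI-written samples whose score is at or above the threshold."""
    if not scores:
        raise ValueError("scores must not be empty")
    flagged = sum(1 for s in scores if s >= threshold)
    return flagged / len(scores)


# Hypothetical scores on a 0-1 scale. These are NOT the article's data.
example_scores = [0.00, 0.01, 0.03, 0.02, 0.60, 0.95, 0.99, 0.98, 0.00, 0.97]

for cutoff in (0.50, 0.90):
    rate = detection_rate(example_scores, cutoff)
    print(f"cutoff {cutoff:.2f}: {rate:.0%} of samples flagged")
```

Because every sample in the set is GPT-5 output, this rate is the same thing as recall on the AI class.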

Dataset at a glance

Below is a quick overview of our small experiment. We collected 101 GPT-5-generated texts (no human texts yet) averaging 526 words, with a minimum of 387 and a maximum of 994. The AI-score that Originality.ai assigns (0 to 100%) indicates how confident the tool is that the content is AI-generated.

- Total samples: 101 GPT-5 texts (no human texts included)

- Average length: 526 words (min 387, max 994)

- AI-score scale: 0 – 100% (Originality.ai “AI-written” confidence)

- Overall mean AI-score: 42.0%

- 25th / 50th / 75th percentiles: 0.02% / 2.57% / 98.78%

- Min / Max AI-score: 0% / 99.95%
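Notice how far the mean (42.0%) sits from the median (2.57%). A gap that large is a first hint that the scores are split into two groups. The sketch below shows one way to produce this kind of summary with Python's standard library; the `summarize` helper and the sample values are assumptions for illustration only, and the quantile method used here may differ from the one behind the figures above.

```python
import statistics


def summarize(scores):
    """Return mean, quartiles, min and max for a list of AI-scores (in percent)."""
    # method="inclusive" treats the list as the full population of samples.
    p25, p50, p75 = statistics.quantiles(scores, n=4, method="inclusive")
    return {
        "mean": statistics.mean(scores),
        "p25": p25,
        "p50": p50,
        "p75": p75,
        "min": min(scores),
        "max": max(scores),
    }


# Hypothetical scores in percent. These are NOT the article's data.
sample = [0.0, 0.0, 1.0, 3.0, 98.0, 99.0, 100.0]
summary = summarize(sample)

for name, value in summary.items():
    print(f"{name}: {value:.2f}%")
```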

If you want a reliable way to bypass Originality.ai then you will need a dedicated AI humanizer like Deceptioner. Use the "Originality.ai" mode for better reliability.


What the distribution tells us

One surprising finding is that the detection scores cluster at two extremes: near 0% and near 100%. This is called a bimodal distribution. In plain English, Originality.ai scores one lump of GPT-5 texts very low and another lump very high, with few in the middle. This causes a tricky situation:

- If you set a low threshold (like 0.50) to catch more GPT-5 texts, you gain very little, because the missed texts score near 0 rather than just under the cutoff. You might also flag actual human texts as AI (false positives). We can’t quantify that yet, because we still don’t have any human samples in the dataset.

- If you set a high threshold (like 0.90) to reduce incorrect flags, you’ll end up missing a big chunk of GPT-5 content that sits in the low-score area.

Hence, if you rely on Originality.ai at a high threshold, it won’t catch the GPT-5 texts scored near 0. And if you rely on a lower threshold, you still miss most of them while risking incorrect flags on legitimate human-written texts.
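The two cutoffs already show how thin the middle is: moving from 0.90 to 0.50 lifted the flagged share from about 39% to about 43%, so only around 4% of samples score between those two cutoffs. Here is a rough sketch of how you could check for this pattern in your own scores by splitting them into low, middle, and high bands. The band edges (0.10 and 0.90), the `band_shares` name, and the sample values are assumptions chosen for illustration.

```python
def band_shares(scores, low=0.10, high=0.90):
    """Share of scores below `low`, at or above `high`, and in between."""
    if not scores:
        raise ValueError("scores must not be empty")
    n = len(scores)
    low_n = sum(1 for s in scores if s < low)
    high_n = sum(1 for s in scores if s >= high)
    mid_n = n - low_n - high_n
    return {"low": low_n / n, "middle": mid_n / n, "high": high_n / n}


# Hypothetical scores on a 0-1 scale. These are NOT the article's data.
example = [0.00, 0.01, 0.02, 0.03, 0.05, 0.55, 0.93, 0.97, 0.99, 1.00]
shares = band_shares(example)

for band, share in shares.items():
    print(f"{band}: {share:.0%}")
```

When the middle band is small, any threshold placed between the band edges changes the detection rate by at most that middle share, which is why tuning the cutoff buys so little here.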


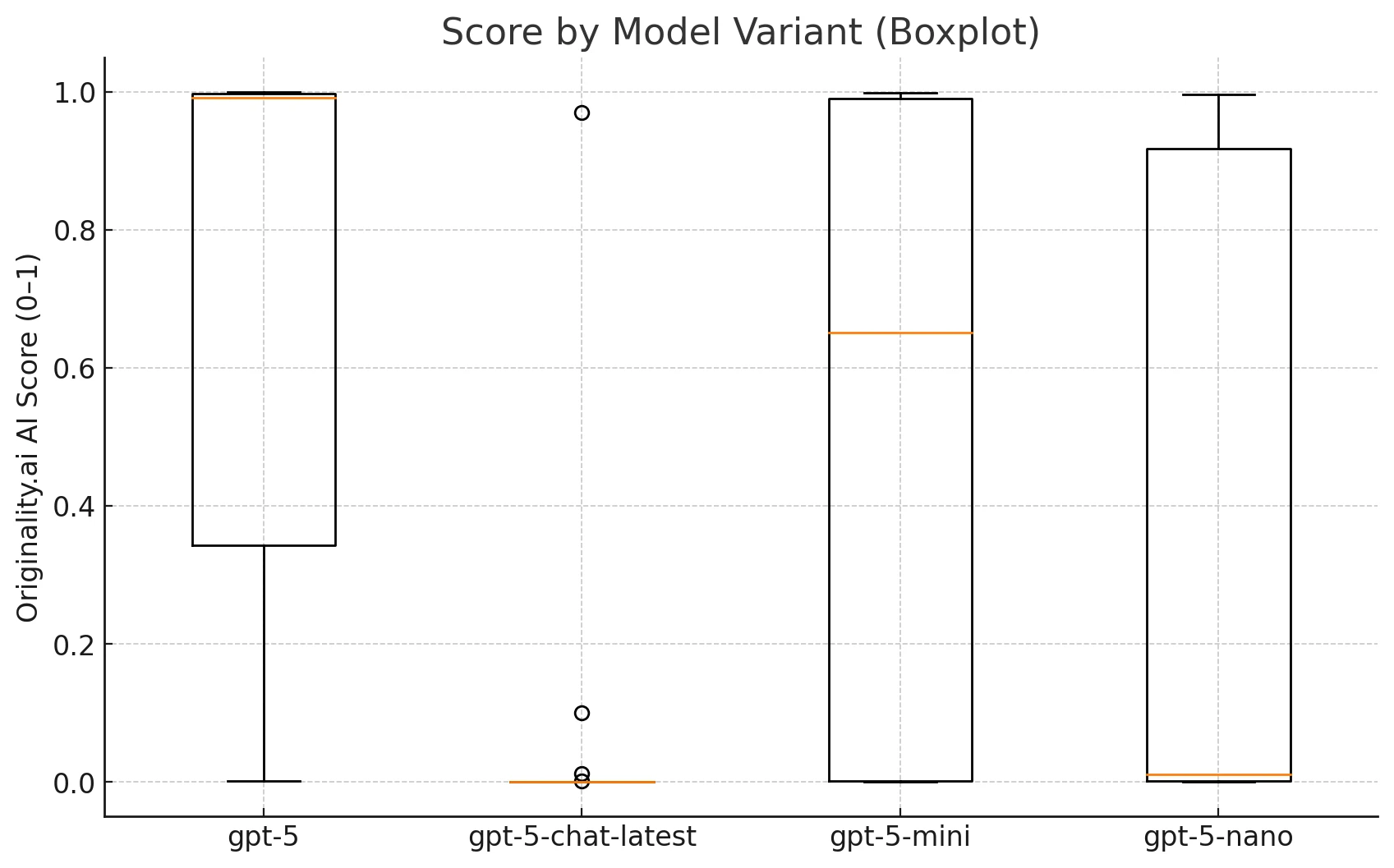

Model-variant behavior differs

GPT-5 has multiple variants and their detection patterns are not the same. We tested four of them:

| GPT-5 model variant | # samples | Share of set |
|---|---|---|
| gpt-5-mini | 28 | 27.7% |
| gpt-5 (base) | 26 | 25.7% |
| gpt-5-nano | 24 | 23.8% |
| gpt-5-chat-latest | 23 | 22.8% |

- gpt-5-mini and gpt-5-nano had higher median AI-scores, which means Originality.ai caught more of their outputs at stricter thresholds.

- gpt-5-chat-latest often scored near 0, making it really tough for Originality.ai to catch at any normal threshold.

- gpt-5 (base) was all over the map: some near 0, some close to 100%, and everything in between.

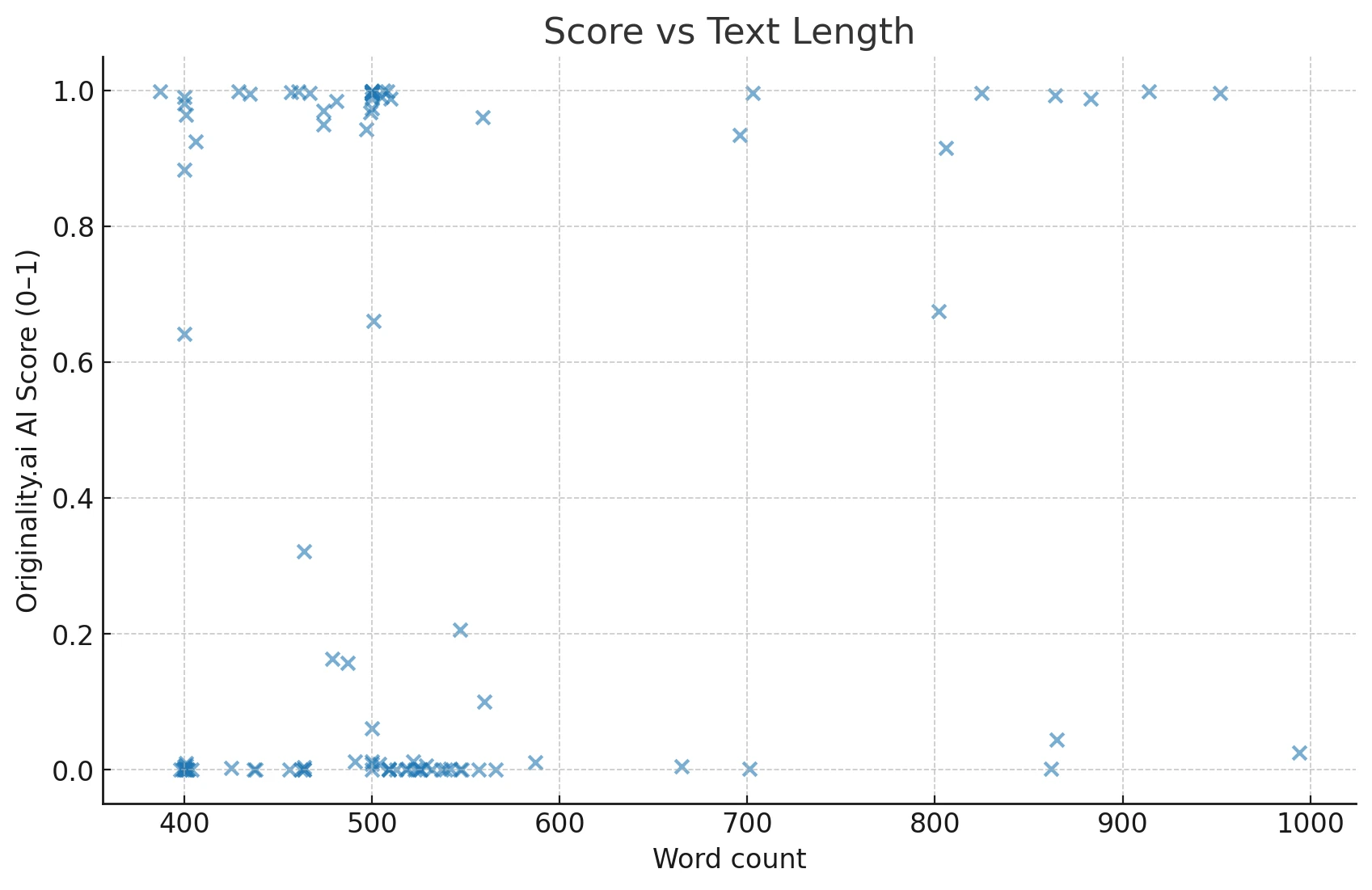
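If you want to run the same kind of per-variant comparison on your own results, a minimal sketch could look like this. The record layout (variant name, score in percent), the `median_by_variant` helper, and the placeholder variant names and values are all assumptions for illustration, not our measurements.

```python
from collections import defaultdict
import statistics


def median_by_variant(records):
    """Group (variant, score) pairs by variant and return each group's median score."""
    groups = defaultdict(list)
    for variant, score in records:
        groups[variant].append(score)
    return {variant: statistics.median(scores) for variant, scores in groups.items()}


# Hypothetical records with placeholder variant names. NOT the article's data.
records = [
    ("variant-a", 97.0),
    ("variant-a", 99.0),
    ("variant-a", 1.0),
    ("variant-b", 0.0),
    ("variant-b", 0.5),
    ("variant-b", 2.0),
]
medians = median_by_variant(records)

for variant, med in medians.items():
    print(f"{variant}: median AI-score {med:.1f}%")
```

The median is used rather than the mean because, with scores piled up at both ends, a few high or low values can drag the mean far from what a typical sample looks like.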

Text length isn’t driving detection scores

Some people think longer AI-generated text is easier to catch. However, when we compared word counts with Originality.ai’s scores, the correlation was about 0.13, which is very weak. In other words, whether your GPT-5 text is 400 words or 900 words has little effect on how Originality.ai rates it. So instructing GPT-5 to produce shorter or longer passages is not a dependable way to either trick Originality.ai or help it.
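Assuming the 0.13 figure is a Pearson coefficient, squaring it gives about 0.017, meaning word count would account for under 2% of the variance in scores. The sketch below shows how such a coefficient can be computed by hand; the `pearson_r` helper and the sample numbers are illustrative assumptions, not our data.

```python
import math


def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two sequences of equal length >= 2")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined when one sequence is constant")
    return cov / math.sqrt(var_x * var_y)


# Hypothetical word counts and AI-scores (percent). NOT the article's data.
word_counts = [400, 450, 520, 610, 700, 880]
ai_scores = [98.0, 1.0, 0.5, 99.0, 2.0, 97.0]

r = pearson_r(word_counts, ai_scores)
print(f"r = {r:.2f}, r squared = {r * r:.3f}")
```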


Practical guidance

- For enforcement: With recall of roughly 39% at the 0.90 threshold, Originality.ai simply isn’t reliable enough (on this data) to serve as a single pass/fail gate for GPT-5 detection. You could be missing the majority of GPT-5 content, and lowering the threshold to compensate might flag legitimate human pieces.

- If you must use it: Lower the threshold (for example, 0.50) so it flags more GPT-5 texts, keeping in mind that on this data recall only rises to about 43%. Please note that this may also lead to more false positives for real people’s work. We can’t put a precise number on that yet because we haven’t tested with a matched set of human samples.

- Better approach: Use Originality.ai scores alongside other policies like checking user metadata, analyzing writing-process steps, measuring stylometry across multiple drafts, or even asking for short oral defenses if it’s an academic scenario. Basically, you want more signals than just an AI score.

- Next step for a full picture: We plan to add a batch of human-written texts on the same topics and with similar length. Then we can measure the real accuracy, precision, and recall. We’ll also compute a confusion matrix so you’ll see how often Originality.ai incorrectly flags things. That’s the only way to balance how many GPT-5 texts slip through vs. how many genuine texts get labeled as AI.
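Once human samples are available, the planned evaluation could be sketched roughly as follows. Everything here is a placeholder: the function names, the threshold, and especially the scores, which are made up so the example runs. We have no human scores yet, so none of these numbers say anything about Originality.ai's real false-positive rate.

```python
def confusion_counts(ai_scores, human_scores, threshold):
    """Count TP, FN, FP, TN when 'flagged' means score >= threshold."""
    tp = sum(s >= threshold for s in ai_scores)     # AI text flagged
    fn = len(ai_scores) - tp                        # AI text missed
    fp = sum(s >= threshold for s in human_scores)  # human text flagged
    tn = len(human_scores) - fp                     # human text passed
    return tp, fn, fp, tn


def metrics(tp, fn, fp, tn):
    """Precision, recall and accuracy, returning 0.0 where a ratio is undefined."""
    total = tp + fn + fp + tn
    return {
        "precision": tp / (tp + fp) if (tp + fp) else 0.0,
        "recall": tp / (tp + fn) if (tp + fn) else 0.0,
        "accuracy": (tp + tn) / total if total else 0.0,
    }


# Hypothetical scores on a 0-1 scale. NOT measured data.
ai = [0.01, 0.02, 0.95, 0.99]
human = [0.00, 0.05, 0.10, 0.92]

counts = confusion_counts(ai, human, threshold=0.90)
result = metrics(*counts)
print("TP, FN, FP, TN =", counts)
print(result)
```

Recall is the only one of these we can report today, because it needs AI samples alone. Precision and accuracy both depend on how human texts score.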

Frequently Asked Questions

Q1. Does Originality.ai detect GPT-5?

Yes, but not consistently. In our test it identified around 39% of GPT-5 texts at a 0.90 threshold, which is not enough if you want it to catch everything. If you loosen the threshold to 0.50, you get a tiny improvement (~43%), but it may produce more false alarms.

Q2. Why is it so uncertain in GPT-5 detection?

One big reason is the bimodal pattern. Many GPT-5 texts are scored near 0% and many near 100%, with few in between, so no threshold handles both groups well. A strict threshold lets more than half of the GPT-5 texts slip through, and a looser one recovers only a few of them while risking flags on normal human texts.

Q3. Does text length affect Originality.ai’s detection?

No, not in any meaningful way. The correlation we measured between word count and AI-score (≈ 0.13) is very low, so you can’t rely on just writing shorter or longer passages to pass or fail the test.

Q4. How do you avoid Originality.ai if you are using GPT-5?

You can’t rely purely on length or rewriting. Originality.ai might still catch you if your text falls in the high-score region. On the other hand, it might miss you if you accidentally create text that’s near the 0% region. There’s no single trick here.

Q5. Should I rely solely on Originality.ai for AI detection?

No, you should not. Think of it as one tool in your toolbox. Checking writing-process attestations, doing stylometric analysis, or verifying references are all additional layers that help.

The Bottom Line

Originality.ai is a sophisticated tool, but when it comes to GPT-5 detection, it’s far from foolproof. You can’t take its word blindly, because in our test more than half of GPT-5 texts slipped through. If you’re building any classroom or organizational policy around AI detection, make sure you combine multiple checks rather than relying on Originality.ai’s single number. AI detection is still in its early days, and both detectors and AI generators evolve constantly. So it’s really a game of cat and mouse, and we’re still in the thick of it.